LAN Ethernet Maximum Rates, Generation, Capturing & Monitoring

Introduction

Designing and managing an IP network requires an in-depth understanding of both the network infrastructure and the performance of the attached devices, including how each network device handles packets. Network engineers typically describe the performance of a network device by the speed of its interfaces, expressed in bits per second (bps). For example, a network device may be described as having a performance of 10 gigabits per second (Gbps). Although this is useful and important information, expressing performance in terms of bps alone does not adequately cover other important network device performance metrics. Determining effective data rates for varying Ethernet packet sizes can provide vital information and a more complete understanding of the characteristics of the network.

The intent of this article is to measure the maximum LAN Ethernet rates that can be achieved for Gigabit Ethernet using the TCP/IP or UDP network protocols. A stepwise approach to generating, capturing and monitoring maximum Ethernet rates will be shown using various tools bundled with the Network Security Toolkit (NST). We will follow many of the methods described in RFC 2544 "Benchmarking Methodology for Network Interconnect Devices" to perform benchmark tests and performance measurements.

We will demonstrate and discuss how Linux segmentation offloading for supported NIC adapters can internally produce Super Jumbo Ethernet Frames. These large Ethernet frames can be captured and decoded by the network protocol analyzer. The use of segmentation offloading can result in increased network performance and less CPU overhead when processing network traffic. Finally, a section on Ethernet flow control (IEEE 802.3x) will demonstrate its effects during high data transfer rates.

Ethernet Maximum Rates

Ethernet Background Information

To get started, some background information is appropriate. Communication between computer systems using TCP/IP takes place through the exchange of packets. A packet is a PDU (Protocol Data Unit) at the IP layer. The PDU at the TCP layer is called a segment, while a PDU at the data-link layer (such as Ethernet) is called a frame. However, the term packet is used generically to describe the data unit that is exchanged between TCP/IP layers as well as between two computers.

First, one needs to know the maximum performance of the network environment to establish a baseline value. We will be using a Switched Gigabit Ethernet (IEEE 802.3ab) network configuration. We chose this configuration because it is a common network topology used in today's enterprise LAN environments.

Frames per second (fps), packets per second (pps) and bits per second (bps) are the usual units for rating the throughput performance of a network device. Understanding how to calculate these rates provides insight into how Ethernet systems function and assists in network architecture design.
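As a rough illustration of how such rates are calculated, the sketch below computes the theoretical maximum frame rate for a given link speed and frame size. It assumes the standard per-frame wire overhead of an 8 byte preamble and a 12 byte inter-frame gap in addition to the frame itself (including its 4 byte CRC). The function and constant names are illustrative only and are not part of any NST tool.

```python
# Per-frame overhead on the wire, in bytes (standard Ethernet values).
PREAMBLE_BYTES = 8          # 7 byte preamble + 1 byte start-of-frame delimiter
INTERFRAME_GAP_BYTES = 12   # minimum idle time between frames, expressed in bytes


def max_frames_per_second(link_bps, frame_bytes_with_crc):
    """Theoretical maximum rate of back-to-back frames of a single size."""
    wire_bytes = frame_bytes_with_crc + PREAMBLE_BYTES + INTERFRAME_GAP_BYTES
    return link_bps / (wire_bytes * 8)


if __name__ == "__main__":
    links = (("Fast Ethernet", 100_000_000), ("Gigabit Ethernet", 1_000_000_000))
    for name, bps in links:
        for frame in (64, 1518):  # minimum and maximum standard frame sizes
            fps = max_frames_per_second(bps, frame)
            print(f"{name}: {frame} byte frames -> {fps:,.0f} fps")
```

For Gigabit Ethernet this gives roughly 1.49 million fps for minimum sized frames and about 81,274 fps for maximum sized frames.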

The following sections show the theoretical maximum frames per second that can be achieved using both Fast Ethernet (100 Mb/sec) and Gigabit Ethernet (1000 Mb/sec) for the TCP/IP and UDP network protocols. Today's network switches and host systems configured with commodity NIC adapters allow many of these theoretical limits to be reached.

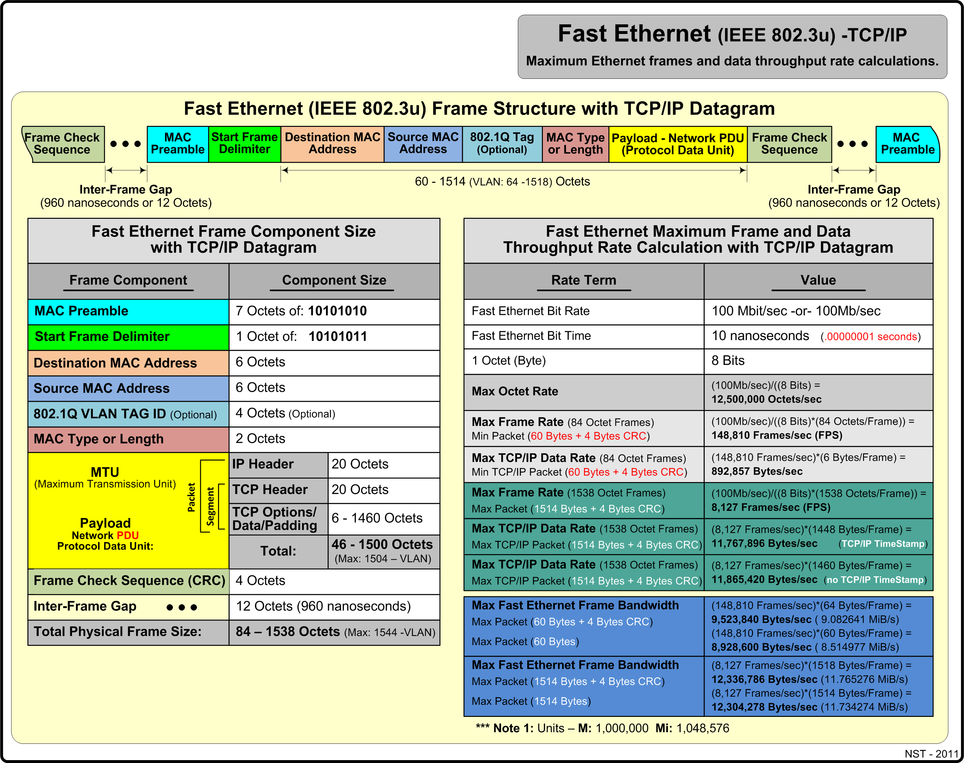

Fast Ethernet Using TCP/IP

The diagram below presents maximum Fast Ethernet (IEEE 802.3u) metrics and reference values for the TCP/IP network protocol using minimum and maximum payload sizes.

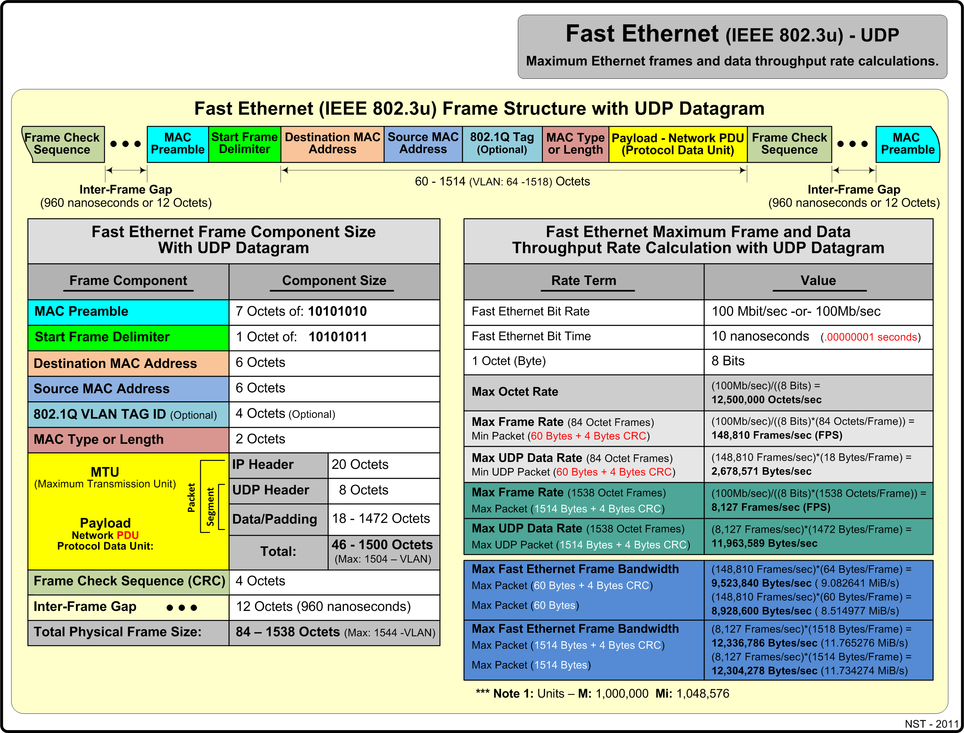

Fast Ethernet Using UDP

The diagram below presents maximum Fast Ethernet (IEEE 802.3u) metrics and reference values for the UDP network protocol using minimum and maximum payload sizes.

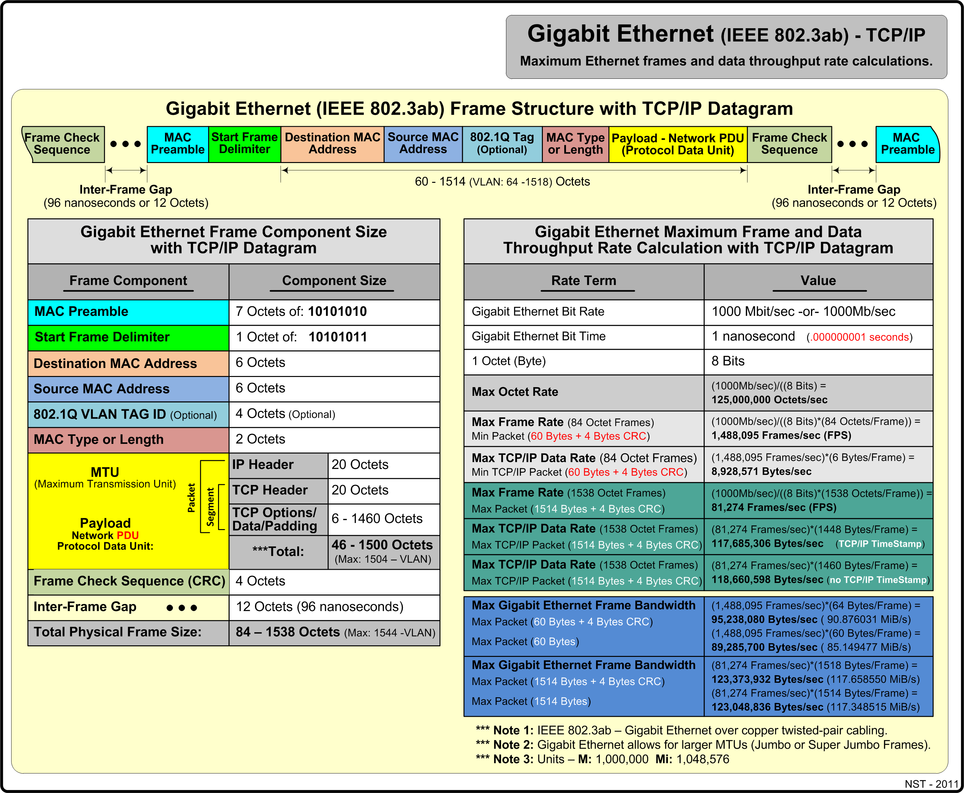

Gigabit Ethernet Using TCP/IP

The diagram below presents maximum Gigabit Ethernet (IEEE 802.3ab) metrics and reference values for the TCP/IP network protocol using minimum and maximum payload sizes.

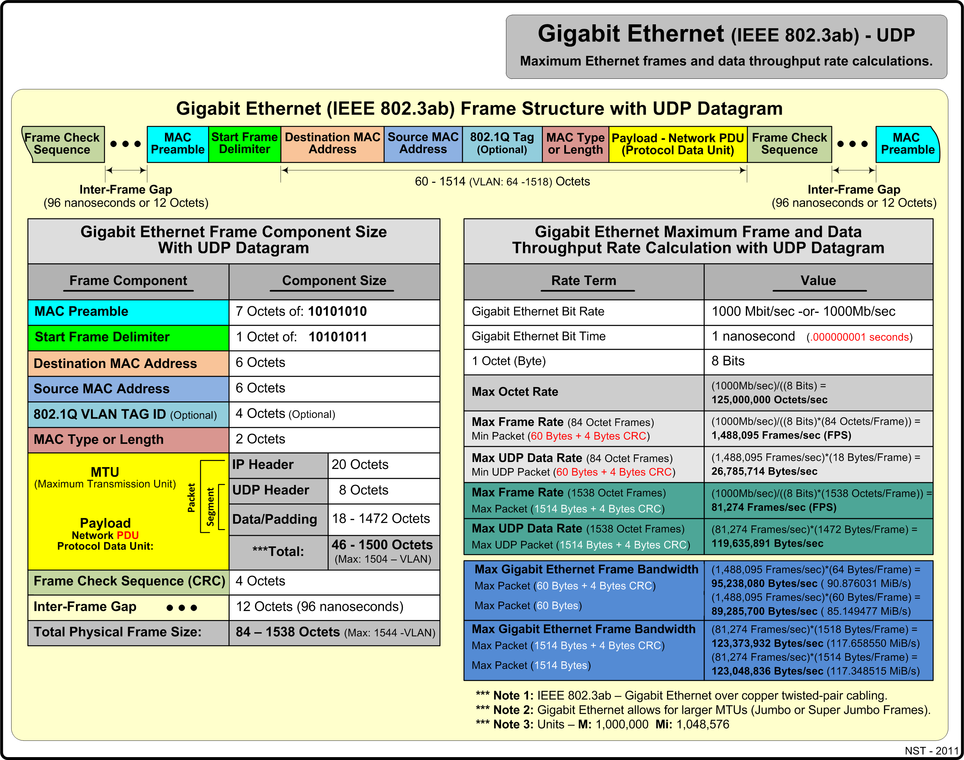

Gigabit Ethernet Using UDP

The diagram below presents maximum Gigabit Ethernet (IEEE 802.3ab) metrics and reference values for the UDP network protocol using minimum and maximum payload sizes.

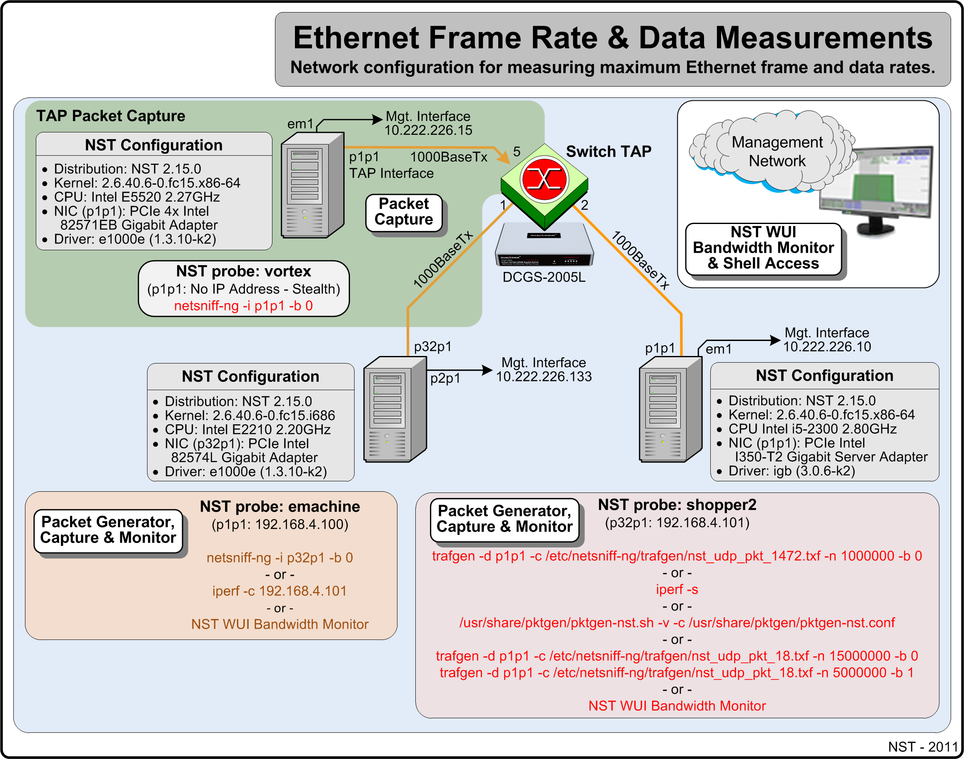

Ethernet Frame Rate & Data Measurements Network Configuration

The Gigabit network diagram below is used as the reference network for bandwidth performance measurements made throughout this article. Each system shown below is a commodity based system that can be purchased at a local retailer. The emachine and the shopper2 system are configured as NST probes and attached to the Gigabit Ethernet network via a Dualcomm DGCS-2005L Gigabit Switch TAP. The shopper2 system is using the next generation Intel Gigabit Ethernet Server Adapter: "I350-T2". Port: 5 of the Gigabit Switch TAP can be used as a SPAN port for system vortex to perform network packet capture on interface: "p1p1". System configurations, functionality and network performance measurement tools with command line arguments are also displayed. A separate out-of-band management network is configured for NST WUI usage and Shell command line access.

Packet Generators, Packet Capture Tools & Network Monitors

Networking tools used in this article for performance bandwidth measurements will now be explained. Each tool described is bundled with the NST distribution.

Packet Generators

pktgen

pktgen is a Linux packet generator that can produce network packets at very high speed in the kernel. The tool is implemented as a Linux Kernel module and is directly included in the core networking subsystem of the Linux Kernel distribution. NST has a supporting pktgen RPM package including a front-end wrapper script: "/usr/share/pktgen/pktgen-nst.sh" and starter configuration file: "/usr/share/pktgen/pktgen-nst.conf" for easy setup and usage. The help document for the script is shown:

pktgen-nst.sh <[-h | --help] |

[-i | --module-info] |

[-k | --kernel-thread-status] |

[-n | --nic-status [network interface]] |

[[-v | --verbose] -l | --load-pktgen] |

[[-v | --verbose] -u | --unload-pktgen] |

[[-v | --verbose] -r | --reset-pktgen] |

[[-v | --verbose] -c | --conf <pktgen-nst.conf>]>

The pktgen network packet generator is used in this article to help measure maximum Gigabit Ethernet frame rates.
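For readers unfamiliar with how the kernel module is driven, the sketch below lists the kind of commands that are written to the pktgen control files under "/proc/net/pktgen". This is only an illustration of the kernel interface and is not taken from the NST wrapper script, which may configure pktgen differently. The helper names, the default values and the MAC address in the usage example are hypothetical.

```python
def pktgen_commands(dev, dst_ip, dst_mac, pkt_size=60, count=1_000_000, thread=0):
    """Return (proc_file, command) pairs that would configure one pktgen run."""
    thread_file = f"/proc/net/pktgen/kpktgend_{thread}"
    dev_file = f"/proc/net/pktgen/{dev}"
    return [
        (thread_file, "rem_device_all"),       # detach devices from this kernel thread
        (thread_file, f"add_device {dev}"),    # attach the transmitting interface
        (dev_file, f"count {count}"),          # number of packets to send
        (dev_file, f"pkt_size {pkt_size}"),    # frame size without the 4 byte CRC
        (dev_file, "delay 0"),                 # no artificial inter-packet delay
        (dev_file, f"dst {dst_ip}"),
        (dev_file, f"dst_mac {dst_mac}"),
        ("/proc/net/pktgen/pgctrl", "start"),  # start transmitting
    ]


def apply_commands(commands):
    """Write each command to its control file (needs root and the pktgen module)."""
    for path, command in commands:
        with open(path, "w") as control:
            control.write(command + "\n")


if __name__ == "__main__":
    # Print the plan only; the destination MAC address here is a placeholder.
    for path, command in pktgen_commands("p1p1", "192.168.4.101", "00:11:22:33:44:55"):
        print(f"{command!r} -> {path}")
```

Returning the commands as data keeps the plan inspectable before anything is written to the kernel.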

iperf

iperf is a tool to measure maximum TCP bandwidth performance, allowing the tuning of various parameters and UDP characteristics. iperf reports bandwidth, delay jitter and datagram loss. iperf runs as either a client or server with all options configured on the command line. The help document for iperf is shown:

Usage: iperf [-s|-c host] [options]

iperf [-h|--help] [-v|--version]

Client/Server:

-f, --format [kmKM] format to report: Kbits, Mbits, KBytes, MBytes

-i, --interval # seconds between periodic bandwidth reports

-l, --len #[KM] length of buffer to read or write (default 8 KB)

-m, --print_mss print TCP maximum segment size (MTU - TCP/IP header)

-o, --output <filename> output the report or error message to this specified file

-p, --port # server port to listen on/connect to

-u, --udp use UDP rather than TCP

-w, --window #[KM] TCP window size (socket buffer size)

-B, --bind <host> bind to <host>, an interface or multicast address

-C, --compatibility for use with older versions does not sent extra msgs

-M, --mss # set TCP maximum segment size (MTU - 40 bytes)

-N, --nodelay set TCP no delay, disabling Nagle's Algorithm

-V, --IPv6Version Set the domain to IPv6

Server specific:

-s, --server run in server mode

-U, --single_udp run in single threaded UDP mode

-D, --daemon run the server as a daemon

Client specific:

-b, --bandwidth #[KM] for UDP, bandwidth to send at in bits/sec

(default 1 Mbit/sec, implies -u)

-c, --client <host> run in client mode, connecting to <host>

-d, --dualtest Do a bidirectional test simultaneously

-n, --num #[KM] number of bytes to transmit (instead of -t)

-r, --tradeoff Do a bidirectional test individually

-t, --time # time in seconds to transmit for (default 10 secs)

-F, --fileinput <name> input the data to be transmitted from a file

-I, --stdin input the data to be transmitted from stdin

-L, --listenport # port to receive bidirectional tests back on

-P, --parallel # number of parallel client threads to run

-T, --ttl # time-to-live, for multicast (default 1)

-Z, --linux-congestion <algo> set TCP congestion control algorithm (Linux only)

Miscellaneous:

-x, --reportexclude [CDMSV] exclude C(connection) D(data) M(multicast) S(settings) V(server) reports

-y, --reportstyle C report as a Comma-Separated Values

-h, --help print this message and quit

-v, --version print version information and quit

[KM] Indicates options that support a K or M suffix for kilo- or mega-

The TCP window size option can be set by the environment variable

TCP_WINDOW_SIZE. Most other options can be set by an environment variable

IPERF_<long option name>, such as IPERF_BANDWIDTH.

Report bugs to <iperf-users@lists.sourceforge.net>

The iperf network packet generator is used in this article to help measure maximum Gigabit Ethernet data rates.

trafgen

trafgen is a zero-copy high performance network packet traffic generator utility that is part of the netsniff-ng networking toolkit. trafgen requires a packet configuration file which defines the characteristics of the network protocol packets to generate. NST bundles two (2) configuration files for UDP packet generation with trafgen. One configuration file is for a minimum UDP payload of 18 bytes (/etc/netsniff-ng/trafgen/nst_udp_pkt_18.txf) which produces a minimum UDP packet of "60 Bytes". The other configuration file is for a maximum UDP payload of 1472 bytes (/etc/netsniff-ng/trafgen/nst_udp_pkt_1472.txf) which produces a maximum UDP packet of "1514 Bytes". The help document for trafgen is shown:

trafgen 0.5.6.0, network packet generator

http://www.netsniff-ng.org

Usage: trafgen [options]

Options:

-d|--dev <netdev> Networking Device i.e., eth0

-c|--conf <file> Packet configuration file

-J|--jumbo-support Support for 64KB Super Jumbo Frames

Default TX slot: 2048Byte

-n|--num <uint> Number of packets until exit

`-- 0 Loop until interrupt (default)

`- n Send n packets and done

-r|--rand Randomize packet selection process

Instead of a round robin selection

-t|--gap <int> Interpacket gap in us (approx)

-S|--ring-size <size> Manually set ring size to <size>:

mmap space in KB/MB/GB, e.g. '10MB'

-k|--kernel-pull <int> Kernel pull from user interval in us

Default is 10us where the TX_RING

is populated with payload from uspace

-b|--bind-cpu <cpu> Bind to specific CPU (or CPU-range)

-B|--unbind-cpu <cpu> Forbid to use specific CPU (or CPU-range)

-H|--prio-high Make this high priority process

-Q|--notouch-irq Do not touch IRQ CPU affinity of NIC

-v|--version Show version

-h|--help Guess what?!

Examples:

See trafgen.txf for configuration file examples.

trafgen --dev eth0 --conf trafgen.txf --bind-cpu 0

trafgen --dev eth0 --conf trafgen.txf --rand --gap 1000

trafgen --dev eth0 --conf trafgen.txf --bind-cpu 0 --num 10 --rand

Note:

This tool is targeted for network developers! You should

be aware of what you are doing and what these options above

mean! Only use this tool in an isolated LAN that you own!

Please report bugs to <bugs@netsniff-ng.org>

Copyright (C) 2011 Daniel Borkmann <dborkma@tik.ee.ethz.ch>,

Swiss federal institute of technology (ETH Zurich)

License: GNU GPL version 2

This is free software: you are free to change and redistribute it.

There is NO WARRANTY, to the extent permitted by law.

The trafgen network packet generator is used in this article to help measure both maximum Gigabit Ethernet data rates and maximum Gigabit Ethernet frame rates.
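The "60 Bytes" and "1514 Bytes" packet sizes quoted for the two NST configuration files follow directly from the protocol header sizes. The short sketch below reproduces that arithmetic, assuming an IPv4 header without options; the constant and function names are illustrative.

```python
ETH_HEADER = 14    # destination MAC + source MAC + EtherType
IPV4_HEADER = 20   # assumes no IP options
UDP_HEADER = 8
ETH_CRC = 4        # frame check sequence appended by the NIC


def udp_frame_size(payload_bytes, include_crc=False):
    """Ethernet frame size for a UDP datagram carrying payload_bytes of data."""
    size = ETH_HEADER + IPV4_HEADER + UDP_HEADER + payload_bytes
    return size + ETH_CRC if include_crc else size


def max_udp_payload(mtu=1500):
    """Largest UDP payload that fits in one unfragmented IPv4 packet."""
    return mtu - IPV4_HEADER - UDP_HEADER


if __name__ == "__main__":
    for payload in (18, max_udp_payload()):
        print(payload, udp_frame_size(payload), udp_frame_size(payload, include_crc=True))
```

With the CRC included, the same two payloads give the familiar 64 byte minimum and 1518 byte maximum Ethernet frame sizes.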

packETH

packETH is a Linux GUI packet generator tool for Ethernet. The tool was used to help construct the UDP packet configuration files for the trafgen packet generator.

Packet Capture Tools

netsniff-ng

netsniff-ng is a zero-copy high performance Linux based network protocol analyzer that is part of the netsniff-ng networking toolkit. The zero-copy mechanism prevents the copy of network packets from Kernel space to User space and vice versa. netsniff-ng supports the pcap file format for capturing, replaying or performing offline-analysis of pcap file dumps. Gigabit Ethernet wire speed packet capture can be accomplished with this tool at maximum data rates and is demonstrated in this article. The help document for netsniff-ng is shown:

netsniff-ng 0.5.6.0, the packet sniffing beast

http://www.netsniff-ng.org

Usage: netsniff-ng [options]

Options:

-i|-d|--dev|--in <dev|pcap> Input source as netdev or pcap

-o|--out <dev|pcap> Output sink as netdev or pcap

-f|--filter <bpf-file> Use BPF filter file from bpfc

-t|--type <type> Only handle packets of defined type:

host|broadcast|multicast|others|outgoing

-s|--silent Do not print captured packets

-J|--jumbo-support Support for 64KB Super Jumbo Frames

Default RX/TX slot: 2048Byte

-n|--num <uint> Number of packets until exit

`-- 0 Loop until interrupt (default)

`- n Send n packets and done

-r|--rand Randomize packet forwarding order

-M|--no-promisc No promiscuous mode for netdev

-m|--mmap Mmap pcap file i.e., for replaying

Default: scatter/gather I/O

-c|--clrw Instead s/g I/O use slower read/write I/O

-S|--ring-size <size> Manually set ring size to <size>:

mmap space in KB/MB/GB, e.g. '10MB'

-k|--kernel-pull <int> Kernel pull from user interval in us

Default is 10us where the TX_RING

is populated with payload from uspace

-b|--bind-cpu <cpu> Bind to specific CPU (or CPU-range)

-B|--unbind-cpu <cpu> Forbid to use specific CPU (or CPU-range)

-H|--prio-high Make this high priority process

-Q|--notouch-irq Do not touch IRQ CPU affinity of NIC

-q|--less Print less-verbose packet information

-l|--payload Only print human-readable payload

-x|--payload-hex Only print payload in hex format

-C|--c-style Print full packet in trafgen/C style hex format

-X|--all-hex Print packets in hex format

-N|--no-payload Only print packet header

-v|--version Show version

-h|--help Guess what?!

Examples:

netsniff-ng --in eth0 --out dump.pcap --silent --bind-cpu 0

netsniff-ng --in dump.pcap --mmap --out eth0 --silent --bind-cpu 0

netsniff-ng --in any --filter icmp.bpf --all-hex

netsniff-ng --in eth0 --out eth1 --silent --bind-cpu 0\

--type host --filter http.bpf

Note:

This tool is targeted for network developers! You should

be aware of what you are doing and what these options above

mean! Use netsniff-ng's bpfc compiler for generating filter files.

Further, netsniff-ng automatically enables the kernel BPF JIT

if present.

Please report bugs to <bugs@netsniff-ng.org>

Copyright (C) 2009-2011 Daniel Borkmann <daniel@netsniff-ng.org>

Copyright (C) 2009-2011 Emmanuel Roullit <emmanuel@netsniff-ng.org>

License: GNU GPL version 2

This is free software: you are free to change and redistribute it.

There is NO WARRANTY, to the extent permitted by law.

The netsniff-ng packet capture protocol analyzer is used in this article to help measure both maximum Gigabit Ethernet data rates and maximum Gigabit Ethernet frame rates.

Network Monitors

NST Network Interface Bandwidth Monitor

The NST Network Interface Bandwidth Monitor is an interactive, dynamic SVG/AJAX enabled application integrated into the NST WUI for monitoring network bandwidth usage on each configured network interface in pseudo real-time. This monitor reads network interface data derived from the Kernel Proc file: "/proc/net/dev". Because these counters are maintained in Kernel space, the displayed graph and computed bandwidth data rates are not affected by packets that a User space capture tool might drop, which makes them highly accurate.
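The general idea of deriving rates from "/proc/net/dev" can be sketched as follows. This is not the Bandwidth Monitor's actual implementation; it is a minimal example that assumes the usual Linux column layout of that file (receive bytes and packets in the first two columns, transmit bytes and packets in the ninth and tenth). All function names are illustrative.

```python
import time


def parse_proc_net_dev(text):
    """Return {interface: counters} from the contents of /proc/net/dev."""
    counters = {}
    for line in text.splitlines()[2:]:  # the first two lines are column headers
        if ":" not in line:
            continue
        name, data = line.split(":", 1)
        fields = data.split()
        counters[name.strip()] = {
            "rx_bytes": int(fields[0]),
            "rx_packets": int(fields[1]),
            "tx_bytes": int(fields[8]),
            "tx_packets": int(fields[9]),
        }
    return counters


def rates(before, after, seconds):
    """Per-second rate of every counter between two samples of one interface."""
    return {key: (after[key] - before[key]) / seconds for key in before}


def sample_rates(interface, period=0.2, path="/proc/net/dev"):
    """Read the counters twice, roughly `period` seconds apart."""
    with open(path) as proc_file:
        before = parse_proc_net_dev(proc_file.read())[interface]
    start = time.monotonic()
    time.sleep(period)
    with open(path) as proc_file:
        after = parse_proc_net_dev(proc_file.read())[interface]
    return rates(before, after, time.monotonic() - start)
```

On a Linux system, `sample_rates("p1p1")` would return the byte and packet rates observed on that interface over one sampling period.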

The Bandwidth Monitor Ruler Measurement tool is used extensively for pinpoint data rate calculations, time duration measurements and for packet counts and rates.

Maximum Gigabit Ethernet Data Rate Measurement

Both the UDP and TCP/IP network protocols will be used to show how to generate, capture and monitor maximum Gigabit Ethernet data rates. The effects of using "Receiver Segmentation Offloading" with TCP/IP packets will also be demonstrated.

trafgen: UDP 1514 Byte Packets

In this section we will demonstrate the use of the trafgen packet generator tool to produce a Gigabit Ethernet UDP stream of packets at the maximum possible data rate of "117 MiB/s". trafgen is run on the shopper2 NST probe using network interface: "p1p1" and the receiving NST probe emachine is capturing the UDP stream with netsniff-ng on network interface: "p32p1".

The output below shows the results of running the trafgen command using the NST provided configuration file containing a UDP packet payload of 1472 bytes (i.e., all ASCII "A" characters (Hex 0x41)). The UDP source port is 2000 (0x07, 0xd0) and the destination port is 2001 (0x07, 0xd1). For the standard Ethernet 1500 Byte MTU, the maximum UDP payload size is "1472 Bytes". In this demonstration 1,000,000 packets were generated. For maximum performance we have bound the trafgen process to CPU: "0".

trafgen 0.5.6.0

CFG: n 1000000, gap 0 us, pkts 1

[0] pkt

len 1514 cnts 0 rnds 0

payload ff ff ff ff ff ff fe 00 00 00 00 00 08 00 45 00 05 ce 12 34 40 00 ff 11 d9 d0 c0 a8 04 64 c0 a8 04 65 07 d0 07 d1 05 ba 87 ec 41 41 41 41 41 41 41 41 [... payload continues with 0x41 bytes up to the 1514 byte packet length ...]

TX: 238.41 MB, 122064 Frames each 2048 Byte allocated

IRQ: p32p1:46 > CPU0

MD: FIRE RR 10us

Running! Hang up with ^C!

1000000 frames outgoing

1514000000 bytes outgoing

[root@shopper2 tmp]#
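The leading bytes of the payload dump in the trafgen output are the Ethernet, IPv4 and UDP headers. The sketch below decodes the addresses and ports from the first 42 bytes of that dump, assuming an IPv4 header without options. The function name and the returned dictionary keys are illustrative.

```python
import socket
import struct

# First 42 bytes of the payload dump printed by trafgen.
HEADER_HEX = (
    "ff ff ff ff ff ff fe 00 00 00 00 00 08 00 "   # Ethernet header (14 bytes)
    "45 00 05 ce 12 34 40 00 ff 11 d9 d0 "         # IPv4 header, first 12 bytes
    "c0 a8 04 64 c0 a8 04 65 "                     # IPv4 source and destination
    "07 d0 07 d1 05 ba 87 ec"                      # UDP header (8 bytes)
)


def decode_headers(hex_dump):
    """Decode MAC addresses, IP addresses and UDP ports from a hex dump."""
    raw = bytes.fromhex(hex_dump)  # spaces between byte pairs are ignored
    dst_mac, src_mac, ethertype = struct.unpack("!6s6sH", raw[:14])
    src_port, dst_port = struct.unpack("!HH", raw[34:38])
    return {
        "dst_mac": ":".join(f"{byte:02x}" for byte in dst_mac),
        "src_mac": ":".join(f"{byte:02x}" for byte in src_mac),
        "ethertype": ethertype,
        "protocol": raw[23],                       # 17 is UDP
        "src_ip": socket.inet_ntoa(raw[26:30]),
        "dst_ip": socket.inet_ntoa(raw[30:34]),
        "src_port": src_port,
        "dst_port": dst_port,
    }


if __name__ == "__main__":
    for field, value in decode_headers(HEADER_HEX).items():
        print(f"{field}: {value}")
```

The decoded ports are 2000 and 2001, matching the byte pairs "07 d0" and "07 d1" in the dump.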

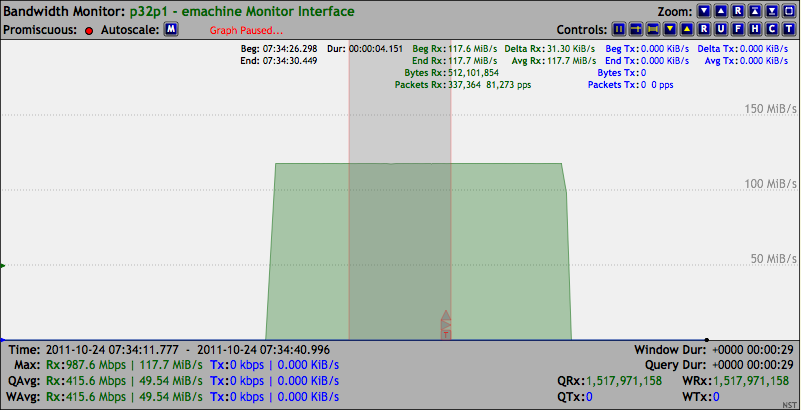

On the receiving emachine system, the Bandwidth Monitor results below show that the maximum Gigabit Ethernet data rate of "117.7 MiB/s (81,273 pps)" was sustained for the duration of sending out 1,000,000 UDP packets. In this case, the Bandwidth Monitor application was running on the emachine and the NST WUI was rendered in a browser on the management network. The Bandwidth Monitor was configured for a data query period of 200 msec.

The Bandwidth Monitor Ruler Measurement tool was opened to span the entire packet generation session, "12.853 seconds" in duration, and revealed that exactly 1,000,000 packets were received. The number of Bytes seen on the emachine is greater than the number reported in the trafgen output (i.e., 1,514,000,000 Bytes) because the NIC adapter, in conjunction with the e1000e driver, includes the CRC Bytes in its count.
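The effect of the CRC Bytes on the counters can be checked with a little arithmetic. The sketch below assumes, as described above, that the interface counts the 4 byte CRC for every received frame; the names are illustrative.

```python
CRC_BYTES = 4  # frame check sequence counted by the receiving interface


def counted_bytes(packets, frame_bytes, crc_bytes=CRC_BYTES):
    """Bytes reported by an interface that counts the CRC of each frame."""
    return packets * (frame_bytes + crc_bytes)


def rate_mib_per_sec(packets_per_sec, frame_bytes, crc_bytes=CRC_BYTES):
    """Data rate in MiB/s for a given packet rate, CRC bytes included."""
    return packets_per_sec * (frame_bytes + crc_bytes) / 2**20


if __name__ == "__main__":
    print(counted_bytes(1_000_000, 1514))             # bytes counted by the receiver
    print(round(rate_mib_per_sec(81_273, 1514), 1))   # rate in MiB/s
```

For 1,000,000 frames of 1514 Bytes this gives 1,518,000,000 counted Bytes, and the observed 81,273 pps corresponds to about 117.7 MiB/s.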

The netsniff-ng protocol analyzer shown below captured exactly 1,000,000 packets on network interface: "p1p1" and stored the results in pcap format to file: "/dev/shm/c1.pcap".

The capture file size was: "1,530,000,024 Bytes".

netsniff-ng 0.5.6.0

RX: 238.41 MB, 122064 Frames each 2048 Byte allocated

OUI UDP TCP ETH

IRQ: p1p1:48 > CPU0

PROMISC

BPF: (000) ret #-1

MD: RX SCATTER/GATHER

1000000 frames incoming

1000000 frames passed filter

0 frames failed filter (out of space)

[root@emachine shm]#

/dev/shm

[root@emachine shm]#

total 1497072

drwxrwxrwt 2 root root 60 Oct 24 06:19 .

drwxr-xr-x 22 root root 4100 Oct 24 05:55 ..

-rw------- 1 root root 1530000024 Oct 24 07:34 c1.pcap

[root@emachine shm]#

File name: ./c1.pcap

File type: Wireshark/tcpdump/... - libpcap

File encapsulation: Ethernet

Packet size limit: file hdr: 65535 bytes

Number of packets: 1000000

File size: 1530000024 bytes

Data size: 1514000000 bytes

Capture duration: 12 seconds

Start time: Mon Oct 24 07:34:22 2011

End time: Mon Oct 24 07:34:35 2011

Data byte rate: 123044025.00 bytes/sec

Data bit rate: 984352199.97 bits/sec

Average packet size: 1514.00 bytes

Average packet rate: 81270.82 packets/sec

SHA1: 2107c313d8ac4634fd4552f3809c15c7f4d4550d

RIPEMD160: 8358815a4a5db76a1a862a1375bc413a21b4fe16

MD5: e8f6ae140d2905f9b313e114951158b7

Strict time order: True

[root@emachine shm]#

As a validity check on the integrity of the netsniff-ng generated pcap file, the capinfos utility was used. The data and packet rate results from the capinfos utility above also validate the results from the NST Network Interface Bandwidth Monitor application.
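The capture file size of "1,530,000,024 Bytes" can also be cross-checked against the classic libpcap file layout, in which a 24 byte global header is followed by one 16 byte record header per captured packet. The sketch below reproduces that calculation; the function name is illustrative.

```python
import struct

# Classic libpcap layout: magic, version major/minor, thiszone, sigfigs, snaplen, linktype.
PCAP_GLOBAL_HEADER = struct.calcsize("<IHHiIII")   # 24 bytes
# Per-packet record header: ts_sec, ts_usec, captured length, original length.
PCAP_RECORD_HEADER = struct.calcsize("<IIII")      # 16 bytes


def pcap_file_size(packets, captured_bytes_per_packet):
    """Expected size of a pcap file holding equally sized packets."""
    per_packet = PCAP_RECORD_HEADER + captured_bytes_per_packet
    return PCAP_GLOBAL_HEADER + packets * per_packet


if __name__ == "__main__":
    print(pcap_file_size(1_000_000, 1514))
```

For 1,000,000 packets of 1514 Bytes the result matches the file size reported by both the directory listing and capinfos.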

iperf: TCP/IP 1514 Byte Packets

Receiver Segmentation Offloading On

In this section we will demonstrate the use of the iperf packet generator tool to produce a Gigabit Ethernet TCP/IP stream of packets at the maximum possible data rate of "117 MiB/s". The iperf server side was first run on the shopper2 NST probe which is depicted below.

------------------------------------------------------------

Server listening on TCP port 5001

TCP window size: 85.3 KByte (default)

------------------------------------------------------------

[ 4] local 192.168.4.101 port 5001 connected with 192.168.4.100 port 42002

[ ID] Interval Transfer Bandwidth

[ 4] 0.0-10.0 sec 1.10 GBytes 941 Mbits/sec

On the emachine, the iperf client side, iperf is run for its default duration of 10 seconds. The result of "942 Mbit/sec" agrees with the theoretical maximum Gigabit Ethernet data rate using TCP/IP packets of "117,685,306 Bytes/sec (941.482448 Mbit/sec)".

------------------------------------------------------------

Client connecting to 192.168.4.101, TCP port 5001

TCP window size: 16.0 KByte (default)

------------------------------------------------------------

[ 3] local 192.168.4.100 port 42002 connected with 192.168.4.101 port 5001

[ ID] Interval Transfer Bandwidth

[ 3] 0.0-10.0 sec 1.10 GBytes 942 Mbits/sec

[root@emachine tmp]#
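The theoretical TCP/IP figure of "117,685,306 Bytes/sec" can be reproduced with the calculation sketched below. It assumes that every segment carries the 12 byte TCP timestamp option, which is consistent with the 66 byte acknowledgement frames (14 + 20 + 32 Bytes) seen in the captures in this article, so each 1500 Byte MTU packet carries 1448 Bytes of data. The names are illustrative.

```python
LINK_BYTES_PER_SEC = 1_000_000_000 // 8   # Gigabit Ethernet
WIRE_OVERHEAD = 8 + 12 + 4 + 14           # preamble, inter-frame gap, CRC, Ethernet header
IPV4_HEADER = 20
TCP_HEADER = 20


def tcp_payload_rate(mtu=1500, tcp_option_bytes=12):
    """Theoretical maximum TCP payload rate in bytes per second."""
    payload = mtu - IPV4_HEADER - TCP_HEADER - tcp_option_bytes
    wire_bytes = mtu + WIRE_OVERHEAD
    return LINK_BYTES_PER_SEC * payload / wire_bytes


if __name__ == "__main__":
    rate = round(tcp_payload_rate())
    print(f"{rate:,} Bytes/sec = {rate * 8 / 1e6:.6f} Mbit/sec")
```

Without the timestamp option (`tcp_option_bytes=0`) the payload per packet rises to 1460 Bytes and the theoretical rate is slightly higher.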

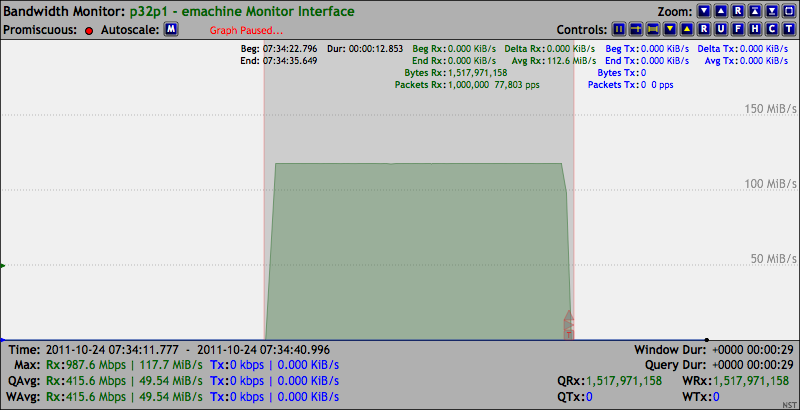

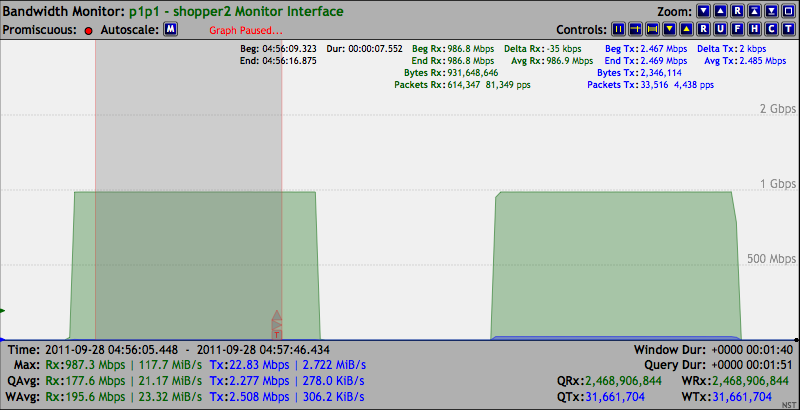

The Bandwidth Monitoring graph shown below contains two (2) iperf sessions. The left side shows the current results. The right side shows another iperf TCP/IP packet generation session with Receiver Segmentation Offloading: Disabled, which is discussed in the next section. The maximum Gigabit Ethernet data rate using TCP/IP packets is shown on the graph at "117.7 MiB/s".

By default, Linux Receiver Segmentation Offloading was on (i.e., generic-receive-offload: on), as shown below in the output of the "ethtool" utility. The effect of this feature is to internally produce "Jumbo Frames" or "Super Jumbo Frames" for supported NIC hardware during high TCP/IP data bandwidth rates. These frames are then presented to the Linux network protocol stack. In our case, the Intel Gigabit Ethernet Controller: "82574L" supports Segmentation Offloading and Jumbo Frames.

The netsniff-ng protocol analyzer was also used during the iperf packet generation session to capture the first "20 TCP/IP packets". The results indicate that Jumbo Frames were actually produced internally (i.e., in Kernel space only, not on the wire). One can see multiple TCP/IP packets with an Ethernet frame size of "5858 Bytes". Since larger TCP/IP payload sizes are being used, fewer TCP/IP "Acknowledgement" packets need to be sent back to the transmitter side. This is one of the benefits of using Receiver Segmentation Offloading.

Offload parameters for p1p1:

rx-checksumming: on

tx-checksumming: on

scatter-gather: on

tcp-segmentation-offload: on

udp-fragmentation-offload: off

generic-segmentation-offload: on

generic-receive-offload: on

large-receive-offload: off

rx-vlan-offload: on

tx-vlan-offload: on

ntuple-filters: off

receive-hashing: off

[root@shopper2 shm]#

netsniff-ng 0.5.6.0

RX: 238.41 MB, 122064 Frames each 2048 Byte allocated

OUI UDP TCP ETH

PROMISC

BPF: (000) ret #-1

MD: RX SCATTER/GATHER

< 3 74 Intel Corporate => Intel Corporate IPv4 IPv4 192.168.4.100/192.168.4.101 Len 60 TCP commplex-link 42509/5001 F SYN Win 14600 S/A 0x638d9a23/0x0

> 3 74 Intel Corporate => Intel Corporate IPv4 IPv4 192.168.4.101/192.168.4.100 Len 60 TCP commplex-link 5001/42509 F SYN ACK Win 14480 S/A 0x59bd8a33/0x638d9a24

< 3 66 Intel Corporate => Intel Corporate IPv4 IPv4 192.168.4.100/192.168.4.101 Len 52 TCP commplex-link 42509/5001 F ACK Win 913 S/A 0x638d9a24/0x59bd8a34

< 3 90 Intel Corporate => Intel Corporate IPv4 IPv4 192.168.4.100/192.168.4.101 Len 76 TCP commplex-link 42509/5001 F PSH ACK Win 913 S/A 0x638d9a24/0x59bd8a34

> 3 66 Intel Corporate => Intel Corporate IPv4 IPv4 192.168.4.101/192.168.4.100 Len 52 TCP commplex-link 5001/42509 F ACK Win 114 S/A 0x59bd8a34/0x638d9a3c

< 3 2962 Intel Corporate => Intel Corporate IPv4 IPv4 192.168.4.100/192.168.4.101 Len 2948 TCP commplex-link 42509/5001 F ACK Win 913 S/A 0x638d9a3c/0x59bd8a34

> 3 66 Intel Corporate => Intel Corporate IPv4 IPv4 192.168.4.101/192.168.4.100 Len 52 TCP commplex-link 5001/42509 F ACK Win 136 S/A 0x59bd8a34/0x638da58c

< 3 5858 Intel Corporate => Intel Corporate IPv4 IPv4 192.168.4.100/192.168.4.101 Len 5844 TCP commplex-link 42509/5001 F ACK Win 913 S/A 0x638da58c/0x59bd8a34

> 3 66 Intel Corporate => Intel Corporate IPv4 IPv4 192.168.4.101/192.168.4.100 Len 52 TCP commplex-link 5001/42509 F ACK Win 159 S/A 0x59bd8a34/0x638dbc2c

< 3 4410 Intel Corporate => Intel Corporate IPv4 IPv4 192.168.4.100/192.168.4.101 Len 4396 TCP commplex-link 42509/5001 F ACK Win 913 S/A 0x638dbc2c/0x59bd8a34

> 3 66 Intel Corporate => Intel Corporate IPv4 IPv4 192.168.4.101/192.168.4.100 Len 52 TCP commplex-link 5001/42509 F ACK Win 181 S/A 0x59bd8a34/0x638dcd24

< 3 1514 Intel Corporate => Intel Corporate IPv4 IPv4 192.168.4.100/192.168.4.101 Len 1500 TCP commplex-link 42509/5001 F ACK Win 913 S/A 0x638dcd24/0x59bd8a34

> 3 66 Intel Corporate => Intel Corporate IPv4 IPv4 192.168.4.101/192.168.4.100 Len 52 TCP commplex-link 5001/42509 F ACK Win 204 S/A 0x59bd8a34/0x638dd2cc

< 3 5858 Intel Corporate => Intel Corporate IPv4 IPv4 192.168.4.100/192.168.4.101 Len 5844 TCP commplex-link 42509/5001 F PSH ACK Win 913 S/A 0x638dd2cc/0x59bd8a34

< 3 1514 Intel Corporate => Intel Corporate IPv4 IPv4 192.168.4.100/192.168.4.101 Len 1500 TCP commplex-link 42509/5001 F ACK Win 913 S/A 0x638de96c/0x59bd8a34

> 3 66 Intel Corporate => Intel Corporate IPv4 IPv4 192.168.4.101/192.168.4.100 Len 52 TCP commplex-link 5001/42509 F ACK Win 227 S/A 0x59bd8a34/0x638de96c

> 3 66 Intel Corporate => Intel Corporate IPv4 IPv4 192.168.4.101/192.168.4.100 Len 52 TCP commplex-link 5001/42509 F ACK Win 249 S/A 0x59bd8a34/0x638def14

< 3 4410 Intel Corporate => Intel Corporate IPv4 IPv4 192.168.4.100/192.168.4.101 Len 4396 TCP commplex-link 42509/5001 F ACK Win 913 S/A 0x638def14/0x59bd8a34

> 3 66 Intel Corporate => Intel Corporate IPv4 IPv4 192.168.4.101/192.168.4.100 Len 52 TCP commplex-link 5001/42509 F ACK Win 272 S/A 0x59bd8a34/0x638e000c

< 3 5858 Intel Corporate => Intel Corporate IPv4 IPv4 192.168.4.100/192.168.4.101 Len 5844 TCP commplex-link 42509/5001 F ACK Win 913 S/A 0x638e000c/0x59bd8a34

30 frames incoming

30 frames passed filter

0 frames failed filter (out of space)

[root@shopper2 shm]#
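The large frame sizes in the capture are whole multiples of the per-packet TCP payload plus one set of headers. The sketch below works out how many wire segments each captured frame represents, assuming 66 Bytes of headers (14 Ethernet + 20 IPv4 + 32 TCP including the timestamp option) and 1448 Bytes of data per wire packet. These values are inferred from the frame sizes in the capture and are not reported by any tool; the names are illustrative.

```python
HEADER_BYTES = 14 + 20 + 32   # Ethernet + IPv4 + TCP with 12 byte timestamp option
SEGMENT_PAYLOAD = 1448        # TCP data carried by one 1514 byte wire frame


def merged_segments(frame_bytes):
    """Number of full sized wire segments merged into one captured frame."""
    payload = frame_bytes - HEADER_BYTES
    segments, remainder = divmod(payload, SEGMENT_PAYLOAD)
    if payload <= 0 or remainder:
        raise ValueError(f"{frame_bytes} is not a whole number of segments")
    return segments


if __name__ == "__main__":
    for size in (1514, 2962, 4410, 5858):
        print(f"{size} byte frame = {merged_segments(size)} segment(s)")
```

Under these assumptions the 5858 Byte frames each hold four 1448 Byte segments that arrived as separate 1514 Byte frames on the wire.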

Receiver Segmentation Offloading Off

The iperf session will be run again, only this time Receiver Segmentation Offloading will be disabled on the shopper2 system receiver side. The "ethtool" utility with the following options: "-K p1p1 gro off" is used to disable Receiver Segmentation Offloading (i.e., generic-receive-offload: off). Now Jumbo Frames will not be produced.

Offload parameters for p1p1:

rx-checksumming: on

tx-checksumming: on

scatter-gather: on

tcp-segmentation-offload: on

udp-fragmentation-offload: off

generic-segmentation-offload: on

generic-receive-offload: off

large-receive-offload: off

rx-vlan-offload: on

tx-vlan-offload: on

ntuple-filters: off

receive-hashing: off

[root@shopper2 shm]#
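When repeating this test it can be useful to confirm the offload state programmatically before each run. The sketch below parses offload settings in the format shown above, which "ethtool -k" (lower case) prints for an interface. The function names are illustrative, and the parser only assumes "name: on" or "name: off" lines.

```python
import subprocess


def parse_offload_settings(text):
    """Map each feature name in ethtool offload output to True (on) or False (off)."""
    settings = {}
    for line in text.splitlines():
        name, separator, value = line.partition(":")
        words = value.split()
        # Skip the heading line and anything that is not an on/off setting.
        if separator and words and words[0] in ("on", "off"):
            settings[name.strip()] = words[0] == "on"
    return settings


def read_offload_settings(interface):
    """Run `ethtool -k <interface>` and parse its output (requires ethtool)."""
    result = subprocess.run(
        ["ethtool", "-k", interface], capture_output=True, text=True, check=True
    )
    return parse_offload_settings(result.stdout)


if __name__ == "__main__":
    sample = "Offload parameters for p1p1:\ngeneric-receive-offload: off\n"
    print(parse_offload_settings(sample))
```

A test script could then refuse to start a measurement run unless `settings["generic-receive-offload"]` has the expected value.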

------------------------------------------------------------

Server listening on TCP port 5001

TCP window size: 85.3 KByte (default)

------------------------------------------------------------

[ 5] local 192.168.4.101 port 5001 connected with 192.168.4.100 port 42011

[ ID] Interval Transfer Bandwidth

[ 5] 0.0-10.0 sec 1.10 GBytes 941 Mbits/sec

The results again show that the maximum Gigabit Ethernet rate using TCP/IP packets was reached: "942 Mbits/sec" as reported by the iperf client (the server side reported "941 Mbits/sec").

------------------------------------------------------------

Client connecting to 192.168.4.101, TCP port 5001

TCP window size: 16.0 KByte (default)

------------------------------------------------------------

[ 3] local 192.168.4.100 port 42002 connected with 192.168.4.101 port 5001

[ ID] Interval Transfer Bandwidth

[ 3] 0.0-10.0 sec 1.10 GBytes 942 Mbits/sec

[root@emachine tmp]#
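The measured iperf rate can be compared against a rough estimate of the maximum TCP payload rate on Gigabit Ethernet. The sketch below is an approximation under stated assumptions: a 1500 Byte MTU, 38 Bytes of per-frame Ethernet overhead (preamble, header, CRC and inter-frame gap), and 12 Bytes of TCP options per segment, which is inferred from the "Len 52" acknowledgement packets in the captures. The names are hypothetical.

```python
LINE_RATE = 1_000_000_000   # bits per second, Gigabit Ethernet
MTU = 1500                  # bytes
PREAMBLE_SFD = 8            # bytes, preamble + start frame delimiter
ETH_HEADER = 14             # bytes
ETH_FCS = 4                 # bytes, frame check sequence (CRC)
INTERFRAME_GAP = 12         # bytes
IP_HEADER = 20              # bytes
TCP_BASE_HEADER = 20        # bytes


def tcp_goodput_bps(tcp_option_bytes=0, mtu=MTU, line_rate=LINE_RATE):
    """Estimate the TCP payload rate when every frame carries a full MTU."""
    payload = mtu - IP_HEADER - TCP_BASE_HEADER - tcp_option_bytes
    wire_bytes = PREAMBLE_SFD + ETH_HEADER + mtu + ETH_FCS + INTERFRAME_GAP
    return line_rate * payload / wire_bytes


print(f"No TCP options:      {tcp_goodput_bps(0) / 1e6:.1f} Mbit/sec")
print(f"12 bytes of options: {tcp_goodput_bps(12) / 1e6:.1f} Mbit/sec")
```

With the assumed 12 Bytes of options the estimate is about 941.5 Mbit/sec, which is close to the 941 to 942 Mbits/sec reported by iperf.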

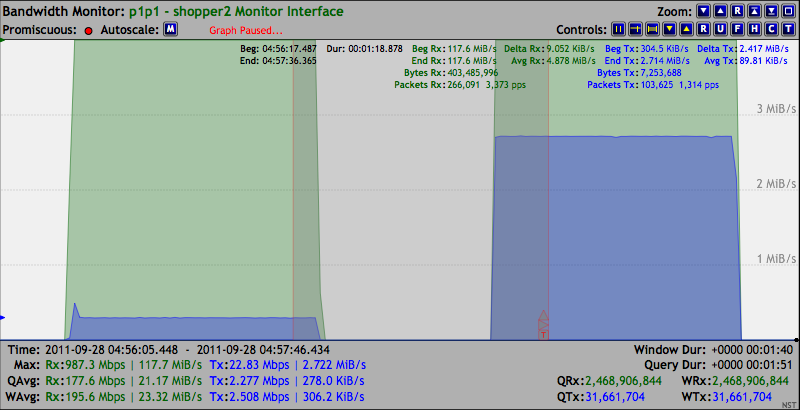

The Bandwidth Monitor results are shown again only now with data rate units displayed in "Bits per Second". Remember, two (2) iperf sessions are shown on the graph. The left side session is with Receiver Segmentation Offloading: On and the right side session is with Receiver Segmentation Offloading: Off.

The Bandwidth Monitor graph below has its "Rate Scale" manually decreased so that the Transmit Data Rate graph is visually amplified. What is most apparent is the increase in the number of TCP/IP "Acknowledgement" packets that need to be sent back to the transmitter. This number increased by almost an order of magnitude because, with Receiver Segmentation Offloading: Off, each TCP/IP packet presented to the protocol stack is limited to the 1500 Byte MTU. Since more TCP/IP packets are received, a greater number of "Acknowledgement" packets need to be generated to satisfy the TCP/IP protocol's guaranteed orderly packet delivery mechanism.

The netsniff-ng protocol analyzer was again used during the iperf packet generation session to capture the first "20 TCP/IP packets". The results below indicate that no Jumbo Frames were produced when Receiver Segmentation Offloading is disabled. The maximum captured Ethernet frame size was limited to "1514 Bytes" (i.e., the standard Ethernet 1500 Byte MTU payload plus the 14 Byte Ethernet header).

netsniff-ng 0.5.6.0

RX: 238.41 MB, 122064 Frames each 2048 Byte allocated

OUI UDP TCP ETH

PROMISC

BPF: (000) ret #-1

MD: RX SCATTER/GATHER

< 3 74 Intel Corporate => Intel Corporate IPv4 IPv4 192.168.4.100/192.168.4.101 Len 60 TCP commplex-link 42658/5001 F SYN Win 14600 S/A 0x674760e6/0x0

> 3 74 Intel Corporate => Intel Corporate IPv4 IPv4 192.168.4.101/192.168.4.100 Len 60 TCP commplex-link 5001/42658 F SYN ACK Win 14480 S/A 0xb8e508c9/0x674760e7

< 3 66 Intel Corporate => Intel Corporate IPv4 IPv4 192.168.4.100/192.168.4.101 Len 52 TCP commplex-link 42658/5001 F ACK Win 913 S/A 0x674760e7/0xb8e508ca

< 3 90 Intel Corporate => Intel Corporate IPv4 IPv4 192.168.4.100/192.168.4.101 Len 76 TCP commplex-link 42658/5001 F PSH ACK Win 913 S/A 0x674760e7/0xb8e508ca

> 3 66 Intel Corporate => Intel Corporate IPv4 IPv4 192.168.4.101/192.168.4.100 Len 52 TCP commplex-link 5001/42658 F ACK Win 114 S/A 0xb8e508ca/0x674760ff

< 3 1514 Intel Corporate => Intel Corporate IPv4 IPv4 192.168.4.100/192.168.4.101 Len 1500 TCP commplex-link 42658/5001 F ACK Win 913 S/A 0x674760ff/0xb8e508ca

> 3 66 Intel Corporate => Intel Corporate IPv4 IPv4 192.168.4.101/192.168.4.100 Len 52 TCP commplex-link 5001/42658 F ACK Win 136 S/A 0xb8e508ca/0x674766a7

< 3 1514 Intel Corporate => Intel Corporate IPv4 IPv4 192.168.4.100/192.168.4.101 Len 1500 TCP commplex-link 42658/5001 F ACK Win 913 S/A 0x674766a7/0xb8e508ca

> 3 66 Intel Corporate => Intel Corporate IPv4 IPv4 192.168.4.101/192.168.4.100 Len 52 TCP commplex-link 5001/42658 F ACK Win 159 S/A 0xb8e508ca/0x67476c4f

< 3 1514 Intel Corporate => Intel Corporate IPv4 IPv4 192.168.4.100/192.168.4.101 Len 1500 TCP commplex-link 42658/5001 F ACK Win 913 S/A 0x67476c4f/0xb8e508ca

> 3 66 Intel Corporate => Intel Corporate IPv4 IPv4 192.168.4.101/192.168.4.100 Len 52 TCP commplex-link 5001/42658 F ACK Win 181 S/A 0xb8e508ca/0x674771f7

< 3 1514 Intel Corporate => Intel Corporate IPv4 IPv4 192.168.4.100/192.168.4.101 Len 1500 TCP commplex-link 42658/5001 F ACK Win 913 S/A 0x674771f7/0xb8e508ca

> 3 66 Intel Corporate => Intel Corporate IPv4 IPv4 192.168.4.101/192.168.4.100 Len 52 TCP commplex-link 5001/42658 F ACK Win 204 S/A 0xb8e508ca/0x6747779f

< 3 1514 Intel Corporate => Intel Corporate IPv4 IPv4 192.168.4.100/192.168.4.101 Len 1500 TCP commplex-link 42658/5001 F ACK Win 913 S/A 0x6747779f/0xb8e508ca

> 3 66 Intel Corporate => Intel Corporate IPv4 IPv4 192.168.4.101/192.168.4.100 Len 52 TCP commplex-link 5001/42658 F ACK Win 227 S/A 0xb8e508ca/0x67477d47

< 3 1514 Intel Corporate => Intel Corporate IPv4 IPv4 192.168.4.100/192.168.4.101 Len 1500 TCP commplex-link 42658/5001 F ACK Win 913 S/A 0x67477d47/0xb8e508ca

> 3 66 Intel Corporate => Intel Corporate IPv4 IPv4 192.168.4.101/192.168.4.100 Len 52 TCP commplex-link 5001/42658 F ACK Win 249 S/A 0xb8e508ca/0x674782ef

< 3 1514 Intel Corporate => Intel Corporate IPv4 IPv4 192.168.4.100/192.168.4.101 Len 1500 TCP commplex-link 42658/5001 F ACK Win 913 S/A 0x674782ef/0xb8e508ca

> 3 66 Intel Corporate => Intel Corporate IPv4 IPv4 192.168.4.101/192.168.4.100 Len 52 TCP commplex-link 5001/42658 F ACK Win 272 S/A 0xb8e508ca/0x67478897

< 3 1514 Intel Corporate => Intel Corporate IPv4 IPv4 192.168.4.100/192.168.4.101 Len 1500 TCP commplex-link 42658/5001 F ACK Win 913 S/A 0x67478897/0xb8e508ca

78 frames incoming

78 frames passed filter

0 frames failed filter (out of space)

[root@shopper2 shm]#

In summary, we have shown that Receiver Segmentation Offloading reduces the amount of data transmitted back to the sender during high TCP/IP traffic workloads: by internally producing "Jumbo Ethernet Frames", it decreases the number of Acknowledgement packets that are required.

Maximum Gigabit Ethernet Frame Rate Measurement

The UDP network protocol will be used to show a best effort approach on how to generate, capture and monitor maximum Gigabit Ethernet frame rates. The effects of using "Ethernet Flow Control Pause Frames" will also be demonstrated. Both the pktgen and trafgen tools will be used to generate the network packet stream.

- Specifications of the NIC hardware: Is the system bus connector I/O capable of Gigabit Ethernet frame rate throughput? Our NIC adapters used the PCIe bus interface.

- Location of the NIC adapter on the system motherboard: In our experience, locating the NIC adapter in different PCIe slots produced significantly different measurement values. Since we did not have schematics for the system motherboard, this was a trial and error effort.

- Network topology: Is your network Gigabit switch capable of switching Ethernet frames at the maximum rate? One may need to use a direct LAN connection via Auto-MDIX or a LAN Crossover Cable.

- Is Ethernet flow control being used? The "ethtool -a <Net Interface>" utility can be used to determine this.


pktgen: UDP 60 Byte Packets

In this section we will demonstrate the use of the pktgen packet generator tool to produce a Gigabit Ethernet UDP stream of packets at the maximum possible Gigabit Ethernet frame rate. pktgen is our best tool for the job since it runs mostly in Kernel space. pktgen is executed on the shopper2 NST probe using network interface: "p1p1". The same interface will be used to monitor the UDP stream with the NST Network Interface Bandwidth Monitor. We use the same system to both generate and monitor this high frame rate measurement because considerably more system resources are needed for the reception and identification of network traffic than for its generation.

We will use three (3) pktgen sessions with the following Ethernet Flow Control conditions:

- Session 1: Ethernet flow control pause frames are enabled.

- Session 2: Ethernet flow control pause frames are disabled on the generator side.

- Session 3: Ethernet flow control pause frames are disabled on both the generator and receiver side.

The ethtool utility is used below to confirm that Ethernet Flow Control is enabled for network interface: "p1p1" on the shopper2 packet generation system.

Pause parameters for p1p1:

Autonegotiate: on

RX: on

TX: on

The ethtool utility is used below to confirm that Ethernet Flow Control is enabled for network interface: "p32p1" on the emachine receiver system.

Pause parameters for p32p1:

Autonegotiate: on

RX: on

TX: on

The first session using pktgen via the NST script: "/usr/share/pktgen/pktgen-nst.sh" is shown below. A total of "4,000,000 UDP" packets were generated at a size of "60 Bytes" each. The maximum Gigabit Ethernet frame rate that was generated from the output of pktgen was "828,853 pps" with a data rate of "49.625 MB/sec (47.326 MiB/sec)".

2011-11-01 08:02:25 Using pktgen configuration file: "/usr/share/pktgen/pktgen-nst.conf"

The linux kernel module: "pktgen" is already loaded.

Reset values for all running "pktgen" kernel threads.

Pktgen command: Control: "/proc/net/pktgen/pgctrl" Cmd: "reset"

Device/pktgen kernel thread affinity mapping: "one-to-one"

Removing all network interface devices from pktgen kernel thread: "kpktgend_0"

Pktgen command: Thread: "/proc/net/pktgen/kpktgend_0" Cmd: "rem_device_all"

Setting network interface device: "p1p1" to "Up" state.

Adding network interface device: "p1p1" to kernel thread: "kpktgend_0"

Pktgen command: Thread: "/proc/net/pktgen/kpktgend_0" Cmd: "add_device p1p1"

Pktgen command: Device: "/proc/net/pktgen/p1p1" Cmd: "clone_skb 1000000"

Pktgen command: Device: "/proc/net/pktgen/p1p1" Cmd: "count 4000000"

Pktgen command: Device: "/proc/net/pktgen/p1p1" Cmd: "delay 0"

Pktgen command: Device: "/proc/net/pktgen/p1p1" Cmd: "pkt_size 60"

Pktgen command: Device: "/proc/net/pktgen/p1p1" Cmd: "src_min 192.168.4.101"

Pktgen command: Device: "/proc/net/pktgen/p1p1" Cmd: "src_max 192.168.4.101"

Pktgen command: Device: "/proc/net/pktgen/p1p1" Cmd: "udp_src_min 6000"

Pktgen command: Device: "/proc/net/pktgen/p1p1" Cmd: "udp_src_max 6000"

Pktgen command: Device: "/proc/net/pktgen/p1p1" Cmd: "dst_mac 00:1B:21:9A:1F:40"

Pktgen command: Device: "/proc/net/pktgen/p1p1" Cmd: "dst_min 192.168.4.100"

Pktgen command: Device: "/proc/net/pktgen/p1p1" Cmd: "dst_max 192.168.4.100"

Pktgen command: Device: "/proc/net/pktgen/p1p1" Cmd: "udp_dst_min 123"

Pktgen command: Device: "/proc/net/pktgen/p1p1" Cmd: "udp_dst_max 123"

Pktgen '/proc' Directory Listing: "/proc/net/pktgen"

=================================================================================

-rw------- 1 root root 0 Nov 1 08:02 /proc/net/pktgen/kpktgend_0

-rw------- 1 root root 0 Nov 1 08:02 /proc/net/pktgen/kpktgend_1

-rw------- 1 root root 0 Nov 1 08:02 /proc/net/pktgen/kpktgend_2

-rw------- 1 root root 0 Nov 1 08:02 /proc/net/pktgen/kpktgend_3

-rw------- 1 root root 0 Nov 1 08:02 /proc/net/pktgen/p1p1

-rw------- 1 root root 0 Nov 1 08:02 /proc/net/pktgen/pgctrl

Type: 'Ctrl-c' to stop...

***Started: Tue Nov 01 08:02:25 EDT 2011

Pktgen command: Control: "/proc/net/pktgen/pgctrl" Cmd: "start"

***Stopped: Tue Nov 01 08:02:30 EDT 2011 Duration: +0000 00:00:05

Pktgen - Packet/Data Rate Results:

=================================================================================

Net Interface: p1p1 Rate: 828853pps 397Mb/sec (397849440bps) errors: 0

Rate: 49.625MB/sec

Duration: 4825944usec (4.826secs)

Bytes Sent: 240000000 (240.000MBytes)

Packets Sent: 4000000 (4.000MPkts)

---------------------------------------------------------------------------------

Totals: Packets Sent: 4000000 (4.000MPkts) Bytes Sent: 240000000 (240.000MBytes)

Max Packet Rate: 828853pps 828.853Kpkts/sec (Duration: 4.826secs)

Max Bit Rate: 397Mb/sec

Max Byte Rate: 49.625MB/sec

[root@shopper2 tmp]#
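The command sequence logged above can be reproduced by writing each command to the corresponding pktgen control file under "/proc/net/pktgen". The following is a minimal sketch, not the NST script itself: the function names are hypothetical, the values are copied from the log above, and actually applying the commands is assumed to require root privileges and a loaded pktgen kernel module.

```python
from pathlib import Path

PKTGEN_DIR = Path("/proc/net/pktgen")


def build_pktgen_commands(device, thread="kpktgend_0"):
    """Return (control file, command) pairs in the order shown in the log above."""
    ctrl = PKTGEN_DIR / "pgctrl"
    thr = PKTGEN_DIR / thread
    dev = PKTGEN_DIR / device
    return [
        (ctrl, "reset"),
        (thr, "rem_device_all"),
        (thr, f"add_device {device}"),
        (dev, "clone_skb 1000000"),
        (dev, "count 4000000"),
        (dev, "delay 0"),
        (dev, "pkt_size 60"),
        (dev, "src_min 192.168.4.101"),
        (dev, "src_max 192.168.4.101"),
        (dev, "udp_src_min 6000"),
        (dev, "udp_src_max 6000"),
        (dev, "dst_mac 00:1B:21:9A:1F:40"),
        (dev, "dst_min 192.168.4.100"),
        (dev, "dst_max 192.168.4.100"),
        (dev, "udp_dst_min 123"),
        (dev, "udp_dst_max 123"),
        (ctrl, "start"),
    ]


def apply_commands(commands):
    """Write each command to its control file (assumed to need root and pktgen)."""
    for path, command in commands:
        path.write_text(command + "\n")


# Dry run: only print what would be written; nothing is sent to the kernel.
for path, command in build_pktgen_commands("p1p1"):
    print(f'echo "{command}" > {path}')
```

Keeping the command list separate from the code that writes it makes it possible to review a configuration as a dry run before any traffic is generated.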

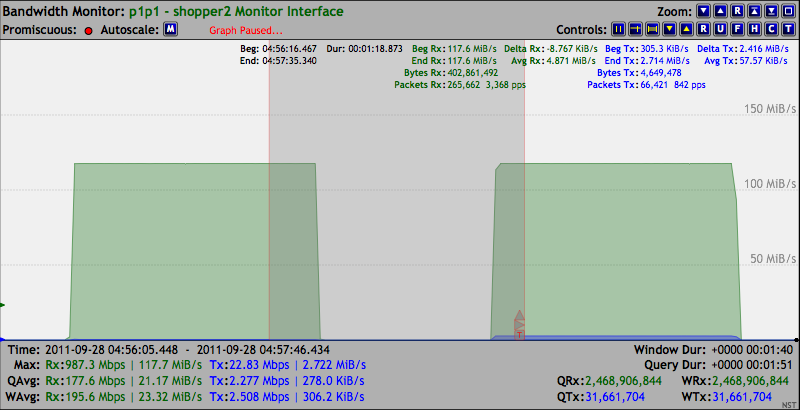

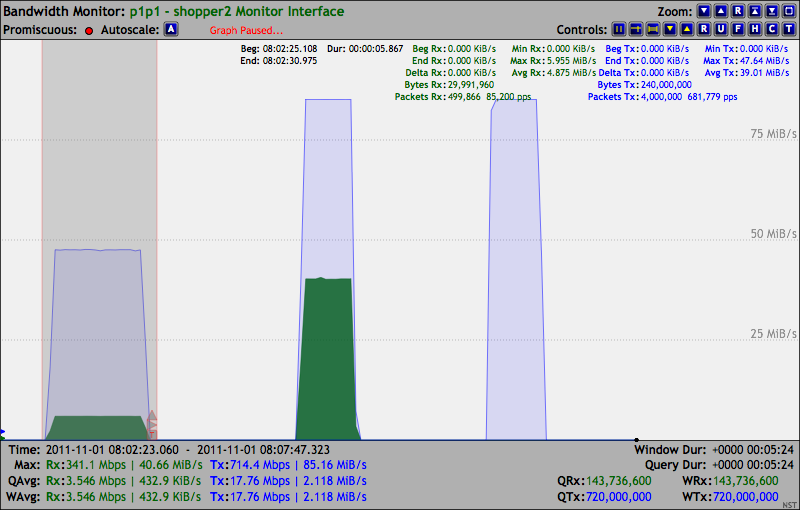

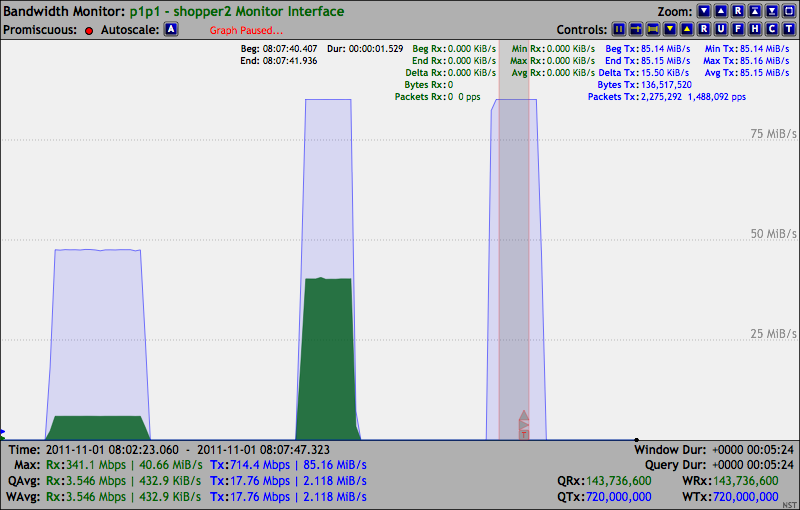

The Bandwidth Monitor graph below also shows the results from the three (3) pktgen sessions. The leftmost side corresponds to the first session. Results from the Ruler Measurement tool agree with the pktgen results shown above. Clearly, Ethernet Flow Control issued by the emachine receiver system has limited the maximum generated Gigabit Ethernet frame rate. One can also see that some Ethernet Pause Control Frames have been passed from the NIC controller to the network protocol stack. This was confirmed by capturing and decoding packets during a separate generation session. A plausible cause is that, at this high framing rate, the NIC controller can only process a limited number of Ethernet Pause Control Frames; the excess Ethernet Pause Control Frames are then passed on to the network protocol stack.

Ruler Measurement Tool Highlight (Tx Traffic): Maximum Rate: 47.64 MiB/s Packets: 4,000,000.

***Note: Some Ethernet Pause Control Frames sent from the receiver emachine system are visible to the Linux Network Protocol stack.

With the second pktgen session we will disable Ethernet Flow Control on the shopper2 system generator side. Once again the ethtool utility is used both to accomplish this and to verify it.

Pause parameters for p1p1:

Autonegotiate: off

RX: off

TX: off

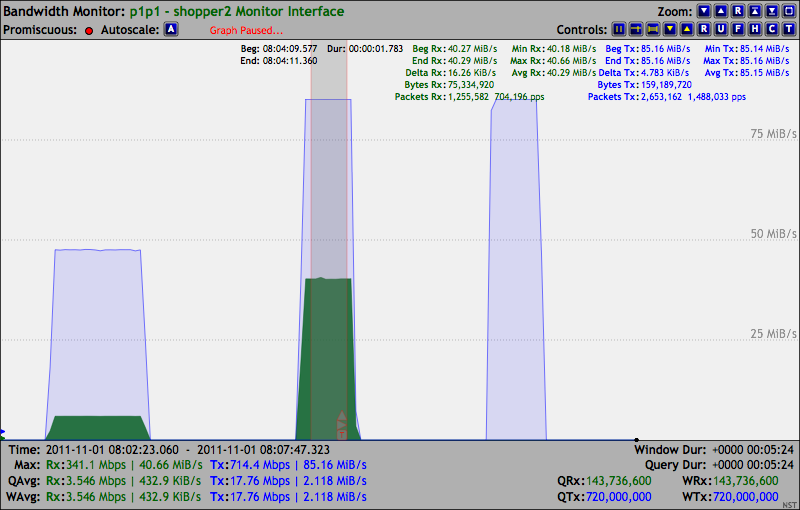

The middle section of the Bandwidth Monitor graph shown below visualizes the results for the second session. The Ruler Measurement tool also highlights these results. With Ethernet Flow Control disabled on the generator, one can see that the Pause Frames from the emachine receiver system are now passed through to the Linux network protocol stack on shopper2. A decode of an Ethernet Pause Frame can be found in section: "Ethernet Flow Control Decode". Since the network interface: "p1p1" on shopper2 did not have to periodically pause, its Gigabit Ethernet frame rate increased to 1,488,033 pps, which is near the theoretical maximum of 1,488,095 pps for minimum sized frames.

Ruler Measurement Tool Highlight (Tx Traffic): 85.15 MiB/s Packets: 2,653,162 1,488,033 pps.

***Note: All Ethernet Pause Control Frames sent from the receiver emachine system are now visible to the Linux Network Protocol stack.

With the third pktgen session we will disable Ethernet Flow Control on both the shopper2 system generator side and the emachine receiver side as shown below.

Pause parameters for p1p1:

Autonegotiate: off

RX: off

TX: off

The third session using pktgen via the NST script: "/usr/share/pktgen/pktgen-nst.sh" is shown below. A total of "4,000,000 UDP" packets were generated at a size of "60 Bytes" each. The maximum Gigabit Ethernet frame rate that was generated from the output of pktgen was "1,488,191 pps" with a data rate of "89.250 MB/sec (85.115 MiB/sec)". This is the highest packet rate that we could achieve with our system / network configuration, and it has reached the maximum theoretical Gigabit Ethernet framing rate.

2011-11-01 08:07:39 Using pktgen configuration file: "/usr/share/pktgen/pktgen-nst.conf"

The linux kernel module: "pktgen" is already loaded.

Reset values for all running "pktgen" kernel threads.

Pktgen command: Control: "/proc/net/pktgen/pgctrl" Cmd: "reset"

Device/pktgen kernel thread affinity mapping: "one-to-one"

Removing all network interface devices from pktgen kernel thread: "kpktgend_0"

Pktgen command: Thread: "/proc/net/pktgen/kpktgend_0" Cmd: "rem_device_all"

Setting network interface device: "p1p1" to "Up" state.

Adding network interface device: "p1p1" to kernel thread: "kpktgend_0"

Pktgen command: Thread: "/proc/net/pktgen/kpktgend_0" Cmd: "add_device p1p1"

Pktgen command: Device: "/proc/net/pktgen/p1p1" Cmd: "clone_skb 1000000"

Pktgen command: Device: "/proc/net/pktgen/p1p1" Cmd: "count 4000000"

Pktgen command: Device: "/proc/net/pktgen/p1p1" Cmd: "delay 0"

Pktgen command: Device: "/proc/net/pktgen/p1p1" Cmd: "pkt_size 60"

Pktgen command: Device: "/proc/net/pktgen/p1p1" Cmd: "src_min 192.168.4.101"

Pktgen command: Device: "/proc/net/pktgen/p1p1" Cmd: "src_max 192.168.4.101"

Pktgen command: Device: "/proc/net/pktgen/p1p1" Cmd: "udp_src_min 6000"

Pktgen command: Device: "/proc/net/pktgen/p1p1" Cmd: "udp_src_max 6000"

Pktgen command: Device: "/proc/net/pktgen/p1p1" Cmd: "dst_mac 00:1B:21:9A:1F:40"

Pktgen command: Device: "/proc/net/pktgen/p1p1" Cmd: "dst_min 192.168.4.100"

Pktgen command: Device: "/proc/net/pktgen/p1p1" Cmd: "dst_max 192.168.4.100"

Pktgen command: Device: "/proc/net/pktgen/p1p1" Cmd: "udp_dst_min 123"

Pktgen command: Device: "/proc/net/pktgen/p1p1" Cmd: "udp_dst_max 123"

Pktgen '/proc' Directory Listing: "/proc/net/pktgen"

=================================================================================

-rw------- 1 root root 0 Nov 1 08:07 /proc/net/pktgen/kpktgend_0

-rw------- 1 root root 0 Nov 1 08:07 /proc/net/pktgen/kpktgend_1

-rw------- 1 root root 0 Nov 1 08:07 /proc/net/pktgen/kpktgend_2

-rw------- 1 root root 0 Nov 1 08:07 /proc/net/pktgen/kpktgend_3

-rw------- 1 root root 0 Nov 1 08:07 /proc/net/pktgen/p1p1

-rw------- 1 root root 0 Nov 1 08:07 /proc/net/pktgen/pgctrl

Type: 'Ctrl-c' to stop...

***Started: Tue Nov 01 08:07:39 EDT 2011

Pktgen command: Control: "/proc/net/pktgen/pgctrl" Cmd: "start"

***Stopped: Tue Nov 01 08:07:42 EDT 2011 Duration: +0000 00:00:03

Pktgen - Packet/Data Rate Results:

=================================================================================

Net Interface: p1p1 Rate: 1488191pps 714Mb/sec (714331680bps) errors: 0

Rate: 89.250MB/sec

Duration: 2687825usec (2.688secs)

Bytes Sent: 240000000 (240.000MBytes)

Packets Sent: 4000000 (4.000MPkts)

---------------------------------------------------------------------------------

Totals: Packets Sent: 4000000 (4.000MPkts) Bytes Sent: 240000000 (240.000MBytes)

Max Packet Rate: 1488191pps 1488.191Kpkts/sec (Duration: 2.688secs)

Max Bit Rate: 714Mb/sec

Max Byte Rate: 89.250MB/sec

[root@shopper2 tmp]#

The right section of the Bandwidth Monitor graph shown below agrees with the pktgen results above. The Ruler Measurement tool also highlights these results. With Ethernet Flow Control disabled entirely on both the generator and the receiver, the generated traffic achieved a Gigabit Ethernet framing rate of 1,488,092 pps.

Ruler Measurement Tool Highlight (Tx Traffic): 85.15 MiB/s Packets: 2,275,292 1,488,092 pps.
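The frame rates measured in the three pktgen sessions can be put in context with a short calculation of the theoretical maximum. This sketch assumes that the pktgen "pkt_size" of 60 Bytes excludes the 4 Byte CRC, and that each frame on the wire also costs 8 Bytes of preamble and 12 Bytes of inter-frame gap. The function names are hypothetical; the measured values are taken from the pktgen output and the Ruler Measurement highlights above.

```python
LINE_RATE = 1_000_000_000   # bits per second, Gigabit Ethernet
PREAMBLE_SFD = 8            # bytes
ETH_FCS = 4                 # bytes, CRC appended by the NIC
INTERFRAME_GAP = 12         # bytes


def max_frame_rate(pkt_size, line_rate=LINE_RATE):
    """Theoretical frames per second for a frame of pkt_size bytes (without CRC)."""
    wire_bits = (PREAMBLE_SFD + pkt_size + ETH_FCS + INTERFRAME_GAP) * 8
    return line_rate / wire_bits


def mb_to_mib(mb_per_sec):
    """Convert MB/sec (10^6 bytes) to MiB/sec (2^20 bytes)."""
    return mb_per_sec * 1_000_000 / (1024 * 1024)


theoretical = max_frame_rate(60)
measured = (
    ("Session 1 (pktgen)", 828_853),
    ("Session 2 (Ruler)", 1_488_033),
    ("Session 3 (pktgen)", 1_488_191),
)
for label, pps in measured:
    share = 100 * pps / theoretical
    print(f"{label}: {pps:,} pps = {share:.2f}% of {theoretical:,.0f} pps")

print(f"49.625 MB/sec = {mb_to_mib(49.625):.3f} MiB/sec")
print(f"89.250 MB/sec = {mb_to_mib(89.250):.3f} MiB/sec")
```

The third session comes out marginally above 100%, which is within what one would expect from rounding in the reported duration rather than an actual excess over line rate.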

trafgen: UDP 60 Byte Packets

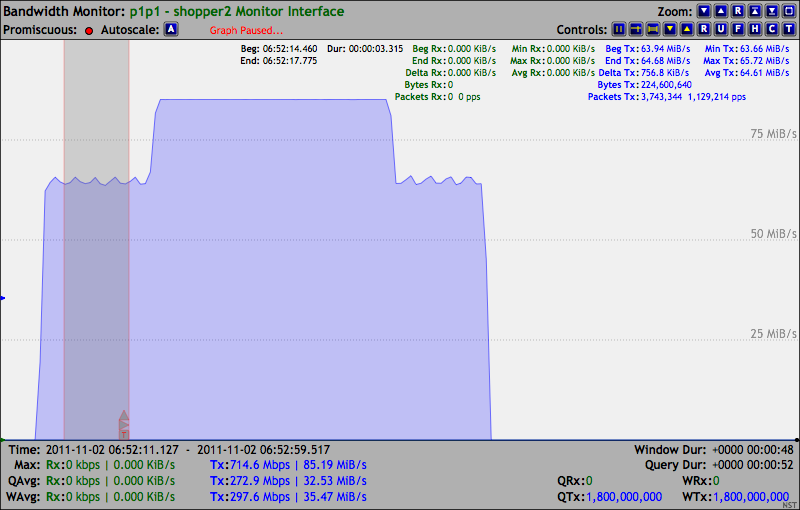

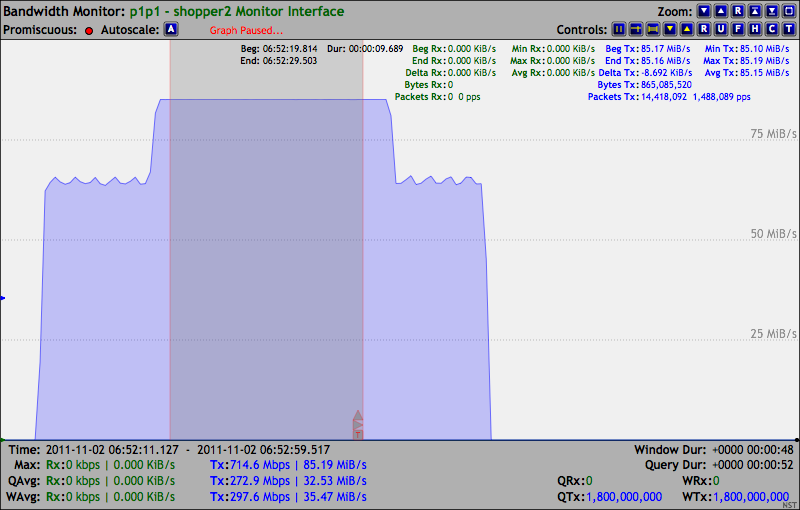

In this section we will demonstrate the use of running multiple instances of the trafgen packet generator tool to produce a Gigabit Ethernet UDP stream of packets at the maximum possible Gigabit Ethernet frame rate. In order to achieve the maximum rate, we will need to run two (2) separate instances of trafgen each bound to a separate CPU core. We used the NST bundled trafgen configuration file: "/etc/netsniff-ng/trafgen/nst_udp_pkt_18.txf" which contains a UDP 60 Byte packet size. We picked the first instance to generate 20,000,000 packets and the second instance to generate 10,000,000 packets so that the Gigabit Ethernet framing rate could be easily measured and presented by the NST Bandwidth Monitor. The graph monitor query rate was configured for 4 updates per second (250 msecs).

The output from running the first instance of trafgen is shown below. This instance was configured to generate 20,000,000 packets and was bound to CPU core: "0".

trafgen 0.5.6.0

CFG: n 20000000, gap 0 us, pkts 1

[0] pkt len 60 cnts 0 rnds 0

payload ff ff ff ff ff ff fe 00 00 00 00 00 08 00 45 00 00 2e 12 34 40 00 ff 11 df 70 c0 a8 04 64 c0 a8 04 65 07 d0 07 d1 00 1a d2 62 41 42 43 44 45 46 47 48 49 4a 4b 4c 4d 4e 4f 50 51 52

TX: 238.41 MB, 122064 Frames each 2048 Byte allocated

IRQ: p1p1:55 > CPU0

MD: FIRE RR 10us

Running! Hang up with ^C!

20000000 frames outgoing

1200000000 bytes outgoing
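The 60 payload bytes printed by trafgen above form one complete Ethernet frame (without the CRC). As an illustration, the sketch below decodes those bytes into Ethernet, IPv4 and UDP fields and verifies the IPv4 header checksum. The function names and dictionary keys are hypothetical, and only an IPv4 header without options is handled.

```python
import ipaddress
import struct

# Frame bytes copied from the trafgen "payload" line above.
PACKET_HEX = (
    "ff ff ff ff ff ff fe 00 00 00 00 00 08 00 "
    "45 00 00 2e 12 34 40 00 ff 11 df 70 c0 a8 04 64 c0 a8 04 65 "
    "07 d0 07 d1 00 1a d2 62 "
    "41 42 43 44 45 46 47 48 49 4a 4b 4c 4d 4e 4f 50 51 52"
)


def ipv4_checksum(header):
    """Ones' complement sum of the header; 0 means the stored checksum is valid."""
    total = sum(struct.unpack(f"!{len(header) // 2}H", header))
    while total >> 16:
        total = (total & 0xFFFF) + (total >> 16)
    return ~total & 0xFFFF


def decode(frame):
    """Decode an Ethernet/IPv4/UDP frame (20-byte IP header assumed)."""
    dst_mac, src_mac, ethertype = struct.unpack("!6s6sH", frame[:14])
    ip = frame[14:34]
    total_len, ttl, proto = struct.unpack("!2xH4xBB", ip[:10])
    sport, dport, udp_len, _udp_csum = struct.unpack("!4H", frame[34:42])
    return {
        "dst_mac": ":".join(f"{b:02x}" for b in dst_mac),
        "src_mac": ":".join(f"{b:02x}" for b in src_mac),
        "ethertype": ethertype,
        "ip_total_len": total_len,
        "ttl": ttl,
        "protocol": proto,
        "src_ip": str(ipaddress.IPv4Address(ip[12:16])),
        "dst_ip": str(ipaddress.IPv4Address(ip[16:20])),
        "ip_checksum_ok": ipv4_checksum(ip) == 0,
        "udp_src_port": sport,
        "udp_dst_port": dport,
        "udp_len": udp_len,
        "payload": frame[42:],
    }


frame = bytes.fromhex(PACKET_HEX)
fields = decode(frame)
for name, value in fields.items():
    print(f"{name}: {value}")
```

The decode shows a broadcast destination MAC, a UDP datagram from 192.168.4.100 to 192.168.4.101, and an 18 Byte data payload, which adds up to the 60 Byte packet length reported by trafgen (14 + 20 + 8 + 18).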

From the results of the Network Bandwidth Monitor graph depicted below, this trafgen instance by itself could only produce an average sustained packet rate of about 1,129,214 pps (64.61 MiB/sec).

Ethernet Pause Control Disabled: Transmitter and Receiver

Ruler Measurement Tool Highlight (Tx Traffic): 64.61 MiB/s Packets: 3,743,344 1,129,214 pps.

Next we simultaneously introduced a second instance of trafgen which was configured to generate 10,000,000 packets and was bound to CPU core: "1".

trafgen 0.5.6.0

CFG: n 10000000, gap 0 us, pkts 1

[0] pkt len 60 cnts 0 rnds 0

payload ff ff ff ff ff ff fe 00 00 00 00 00 08 00 45 00 00 2e 12 34 40 00 ff 11 df 70 c0 a8 04 64 c0 a8 04 65 07 d0 07 d1 00 1a d2 62 41 42 43 44 45 46 47 48 49 4a 4b 4c 4d 4e 4f 50 51 52

TX: 238.41 MB, 122064 Frames each 2048 Byte allocated

IRQ: p1p1:55 > CPU1

MD: FIRE RR 10us

Running! Hang up with ^C!

10000000 frames outgoing

600000000 bytes outgoing

The highlighted results from using two (2) simultaneous instances of trafgen are shown by the Ruler Measurement tool below. A near maximum Gigabit Ethernet framing rate was achieved: 1,488,089 pps (85.15 MiB/s).

Ethernet Pause Control Disabled: Transmitter and Receiver

Ruler Measurement Tool Highlight (Tx Traffic): 85.15 MiB/s Packets: 14,418,092 1,488,089 pps.

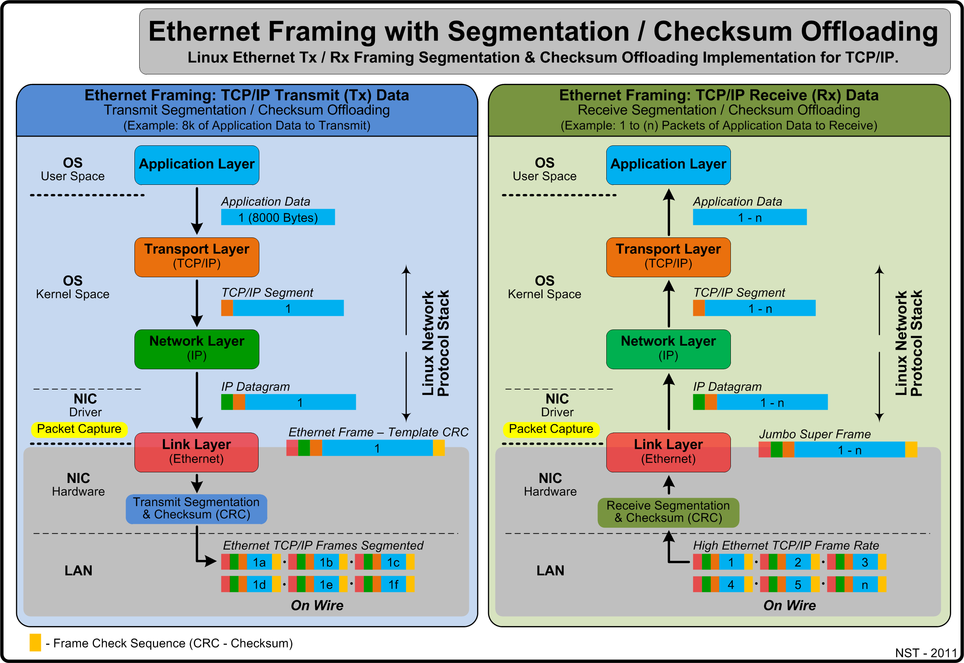

Ethernet Framing - Segmentation & Checksum (CRC) Offloading

Segmentation Offloading is a technology used in NIC hardware to offload a portion of the TCP/IP stack processing to the network controller. It is primarily used with high-speed network interfaces, such as Gigabit Ethernet and 10 Gigabit Ethernet, where the processing overhead of the Linux network protocol stack becomes significant. Similarly, Checksum Offloading defers the checksum (CRC) calculation to the NIC hardware. One can view the "Offloading" parameters and values for a particular network interface using the ethtool utility. The example below is for network interface: "p1p1" on the shopper2 NST system:

Offload parameters for p1p1:

rx-checksumming: on

tx-checksumming: on

scatter-gather: on

tcp-segmentation-offload: on

udp-fragmentation-offload: off

generic-segmentation-offload: on

generic-receive-offload: on

large-receive-offload: off

rx-vlan-offload: on

tx-vlan-offload: on

ntuple-filters: off

receive-hashing: off

[root@shopper2 ~]#

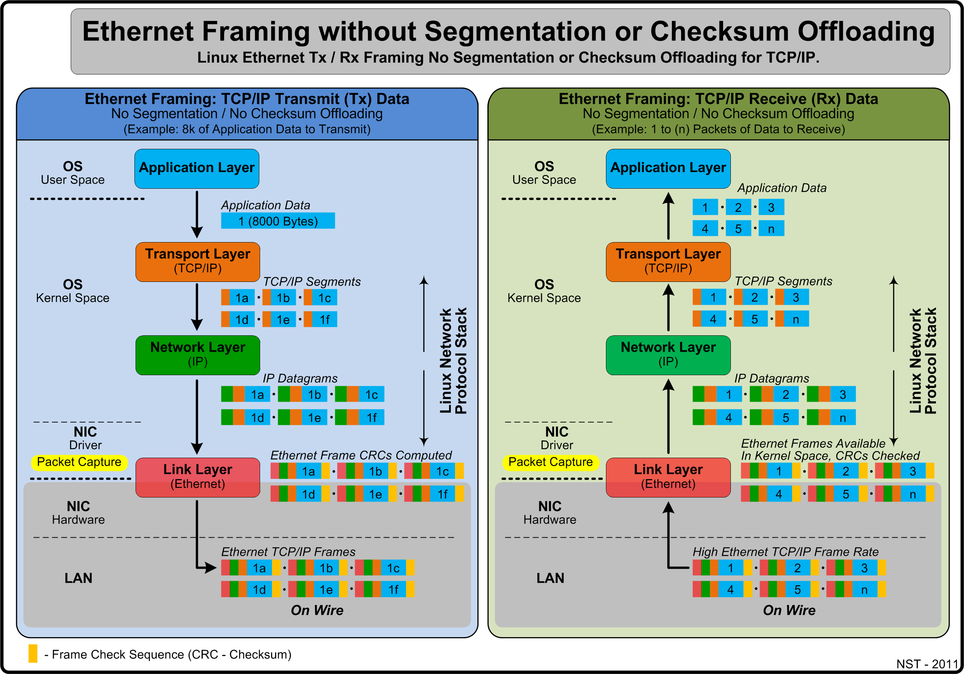

Without Segmentation & Checksum (CRC) Offloading

The left side of the diagram below (i.e., Transmit Data Side) illustrates the flow of sending "8000 Bytes" of application data using TCP/IP. The data is first segmented into six (6) TCP segments carrying up to the maximum of "1460 Bytes" each. This maximum is derived as follows: the Maximum Transmission Unit (MTU) for Ethernet is "1500 Bytes"; subtracting "20 Bytes" for the IP Header and another "20 Bytes" for the TCP Header leaves "1460 Bytes" for data in the TCP segment. This is known as the TCP Maximum Segment Size (MSS). "8000 Bytes" is therefore segmented into five (5) maximum sized TCP/IP segments plus a sixth segment carrying the remaining "700 Bytes". These segments are then sent through the network layer of the Linux network protocol stack and on to the NIC driver to complete the construction of each Ethernet frame.
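The segmentation arithmetic described above can be written out as a short sketch. It assumes IP and TCP headers of 20 Bytes each with no options, as in the text; the function name is hypothetical.

```python
MTU = 1500        # bytes, Ethernet Maximum Transmission Unit
IP_HEADER = 20    # bytes, IPv4 header without options
TCP_HEADER = 20   # bytes, TCP header without options


def segment_sizes(data_len, mtu=MTU):
    """Split data_len bytes of application data into MSS-sized TCP segments."""
    mss = mtu - IP_HEADER - TCP_HEADER       # Maximum Segment Size
    full, rest = divmod(data_len, mss)
    return [mss] * full + ([rest] if rest else [])


sizes = segment_sizes(8000)
print(f"{len(sizes)} segments: {sizes}")
```

For "8000 Bytes" this yields five segments of 1460 Bytes followed by one segment of 700 Bytes.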

The right side of the diagram below (i.e., Receive Data Side) details the flow of receiving 1 to (n) TCP/IP packets from the network up through the Linux network protocol stack and finally to the application. No Segmentation Offloading or Checksum Offloading is used for sending and receiving TCP/IP packets. The entire Ethernet framing including the Checksum calculation and verification is done within the Linux Kernel space.

With Segmentation & Checksum (CRC) Offloading & Packet Capture Considerations

The diagram below illustrates the use of Segmentation Offloading and Checksum (CRC) Offloading when sending and receiving application data as TCP/IP packets. The left side of the diagram below (i.e., Transmit Data Side) shows the flow of sending "8000 Bytes" of application data using TCP/IP. The application data is not segmented unless it exceeds the maximum IP length of "64K Bytes". The data is sent through the Linux network protocol stack and a template header for each layer is constructed. This large TCP/IP packet is then passed to the NIC driver for Ethernet framing including template CRCs for TCP and the Ethernet frame.

At this point, if the Ethernet frame exceeds the "1500 Byte" payload, it will be considered a Jumbo or Super Jumbo Ethernet Frame if captured by a protocol analyzer. This large transmitted Ethernet frame does not appear on the wire (i.e., Network); it exists only internally in Kernel space.

- A Linux protocol analyzer typically captures outgoing packets from a network transmit queue in Kernel space, not from the NIC controller.

- A large transmit Jumbo or Super Jumbo Ethernet Frame can be captured due to "TCP Segmentation Offloading". This frame does not appear on the network; it exists only internally in Kernel space.

- Incorrect transmit TCP Checksums may appear in the capture due to "Transmit Checksum Offloading". The correct calculation is deferred to the NIC controller and is seen correctly on the wire.

The Ethernet frame is then offloaded to the NIC hardware. The NIC controller performs the necessary TCP/IP segmentation which depends on the configured MTU value and checksum calculations prior to transmitting the frames on the wire. These offloading performance optimizations executed by the NIC controller will reduce the Linux Kernel workload thus providing more Kernel resources to accomplish other tasks.

The right side of the diagram above (i.e., Receive Data Side) details the flow of receiving 1 to (n) TCP/IP packets at a high traffic rate from the wire up through the Linux network protocol stack and finally to the application. In this case Segmentation Offloading and Checksum Offloading are used for receiving TCP/IP packets. At a high traffic rate, the NIC Controller will combine multiple TCP/IP packets using Receiver Segmentation Offloading and present the aggregate Ethernet frame to the Linux network protocol stack. If this frame exceeds the "1500 Byte" payload, it will be considered a Jumbo or Super Jumbo Ethernet Frame if captured by a protocol analyzer. This large received Ethernet frame did not appear on the wire (i.e., Network); it exists only internally in Kernel space.

- A Linux protocol analyzer typically captures incoming packets from a network receive queue in Kernel space, not from the NIC controller.

- A large receive Jumbo or Super Jumbo Ethernet Frame can be captured due to "TCP Segmentation Offloading" and "Generic Receive Offload". This frame does not appear on the network; it exists only internally in Kernel space.

- Incorrect receive TCP Checksums may appear in the capture due to "Receive Checksum Offloading". Each Ethernet frame and TCP/IP segment received on the wire has its Checksum verified by the NIC controller.

Receiver Segmentation Offloading helps reduce the amount of data transmitted on the network during high TCP/IP traffic workloads: the internally produced "Jumbo Ethernet Frames" decrease the number of TCP Acknowledgement packets that are required. See section: "Receiver Segmentation Offloading On" for a demonstration.

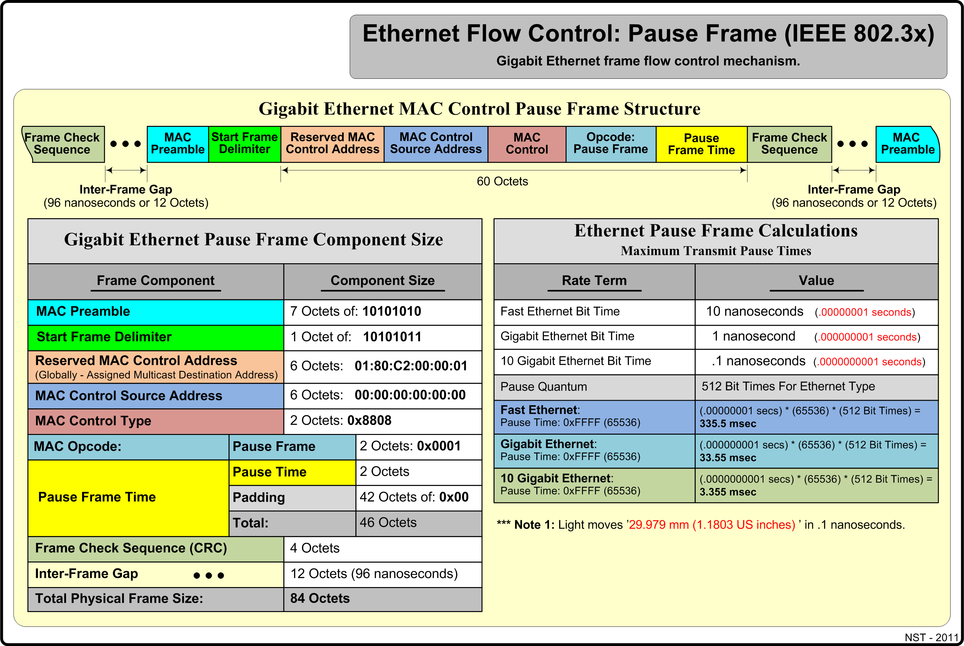

Ethernet Flow Control Pause Frame (IEEE 802.3x)

A transmitting host or network device may generate network traffic faster than the receiving host or device on the link can accept it. To handle this condition, the Institute of Electrical and Electronics Engineers (IEEE) developed an Ethernet Flow Control mechanism that uses a PAUSE frame. The diagram below details the Ethernet framing structure for a PAUSE frame.

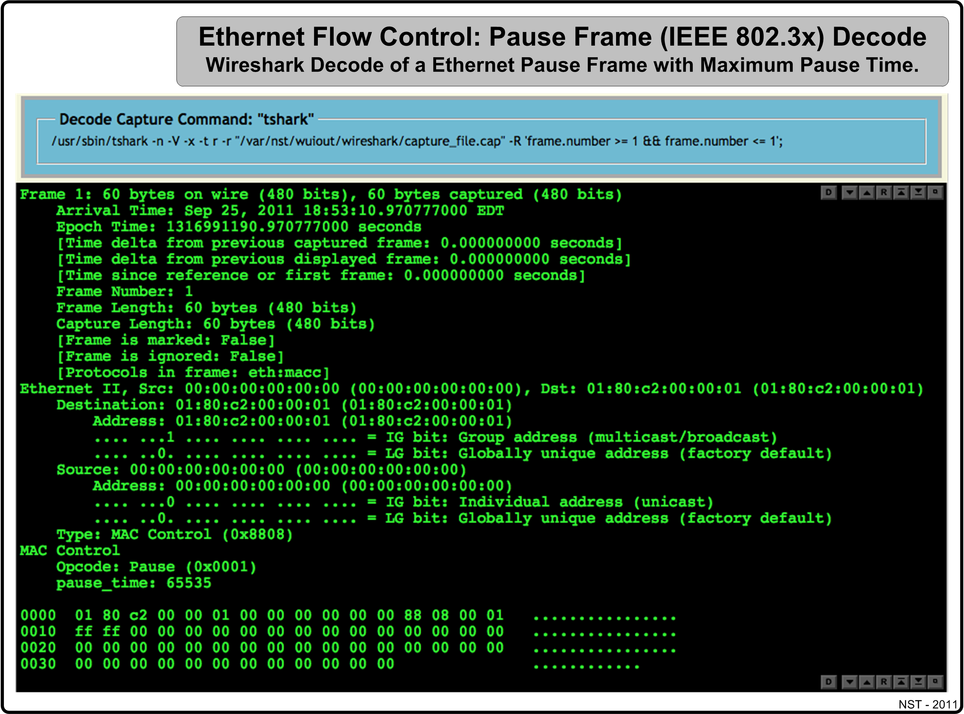
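The PAUSE frame layout can also be sketched in a few lines of code. The example below builds a PAUSE frame without the trailing CRC and computes how long a given pause time lasts at Gigabit speed, where one pause quantum is 512 bit times. The function names are hypothetical, and the source MAC address is simply the destination MAC address that appears in the pktgen configuration earlier in this article, used here for illustration only.

```python
import struct

PAUSE_DST_MAC = bytes.fromhex("0180c2000001")  # reserved MAC Control multicast address
MAC_CONTROL_ETHERTYPE = 0x8808
PAUSE_OPCODE = 0x0001
MIN_FRAME_NO_FCS = 60     # bytes, minimum Ethernet frame size without the CRC
QUANTUM_BITS = 512        # one pause quantum equals 512 bit times


def build_pause_frame(src_mac, quanta):
    """Build a PAUSE frame (without CRC), zero padded to the minimum frame size."""
    header = struct.pack(
        "!6s6sHHH",
        PAUSE_DST_MAC, src_mac, MAC_CONTROL_ETHERTYPE, PAUSE_OPCODE, quanta,
    )
    return header.ljust(MIN_FRAME_NO_FCS, b"\x00")


def pause_seconds(quanta, line_rate=1_000_000_000):
    """Duration of a pause request in seconds at the given line rate."""
    return quanta * QUANTUM_BITS / line_rate


# Illustrative source MAC (taken from the pktgen "dst_mac" setting).
src = bytes.fromhex("001b219a1f40")
frame = build_pause_frame(src, 0xFFFF)
print(frame.hex(" "))
print(f"Pause time 0xffff at 1 Gbit/sec: {pause_seconds(0xFFFF) * 1000:.2f} ms")
```

At Gigabit speed the maximum pause time of 0xffff quanta corresponds to roughly 33.55 milliseconds of requested silence from the transmitter.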

Ethernet Flow Control Decode

pktgen Session 2 above, used for the maximum frame rate measurements, allowed Ethernet Pause frames to be sent up through the Linux network protocol stack. Since they were not processed by the NIC controller, a protocol analyzer can capture and decode them. The protocol analyzer output below was produced by tshark decoding an Ethernet Pause frame with a maximum Pause Time of 0xffff (65535) time units.

Summary

In this Wiki article we used a best effort approach to generate, capture and monitor maximum Gigabit Ethernet data and frame rates using commodity hardware. Useful reference diagrams depicting theoretical network bandwidth rates for minimum and maximum Ethernet payload sizes were presented. Different networking tools included in the NST distribution were used for packet generation and capture. The NST Network Interface Bandwidth Monitor was used to perform many data rate measurements, showing its capability for the network designer and administrator.

Segmentation Offloading techniques and Ethernet Flow Control processing by the NIC controller hardware were described and their effects on network performance and capture were shown.